Agents or Workflows?

The Truth Behind AI 'Agents'


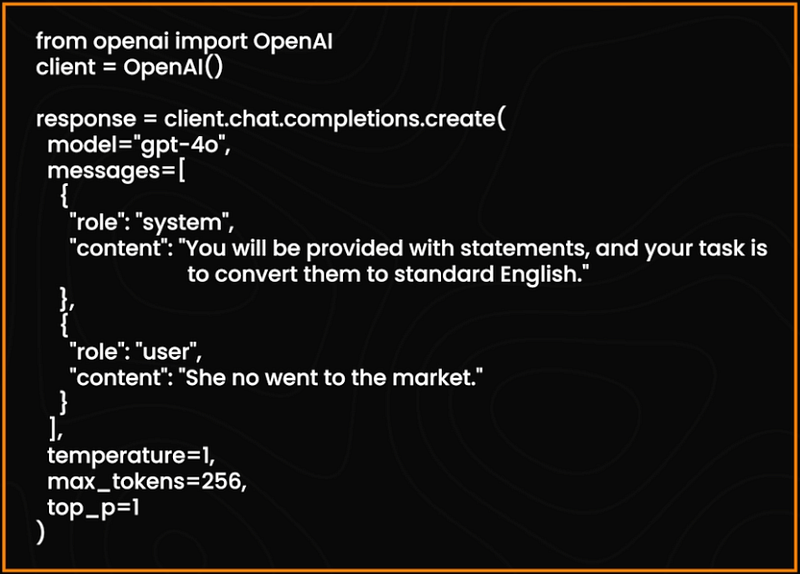

What most people call agents aren’t agents. I’ve never really liked the term “agent”, until I read a recent article by Anthropic that I fully agree with and that finally showed me when something can rightly be called an agent. The vast majority of so-called agents are simply an API call to a language model: a few lines of code and a prompt.
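To make this concrete, here is a minimal Python sketch of such a so-called agent. `call_llm` is a hypothetical placeholder for whatever chat API or local model you use. It returns canned text so the example runs offline. The system prompt is invented for illustration.

```python
# Hypothetical placeholder for a real chat-completion API or local model.
# It returns canned text so this sketch runs without network access.
def call_llm(system_prompt: str, user_message: str) -> str:
    return f"[reply to: {user_message}]"

# Illustrative prompt; any system prompt works the same way.
SYSTEM_PROMPT = "You are a helpful customer-support assistant."

def support_agent(user_message: str) -> str:
    # The entire "agent": one prompt, one model call, one reply.
    # It cannot look anything up, take an action, or choose a next step.
    return call_llm(SYSTEM_PROMPT, user_message)

print(support_agent("Where is my order?"))  # [reply to: Where is my order?]
```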

This cannot act independently, make decisions, or do anything beyond generating text. It simply replies to your users. Still, we call it an agent. But this isn’t what we need. We need real agents. So, what is a real agent?

So, let’s start over. We have an LLM accessed programmatically, either through an API or running locally on your own server or machine. And then what? Well, we need it to take action, or at least do a bit more than just generate text. How? By giving it access to tools and their documentation. For example, we can give it a tool that executes SQL queries on a database holding private knowledge. Specifically, we code all of that ourselves: the LLM generates a SQL query, and our code automatically sends and executes that query on our database. We then send the outputs back to the LLM so it can use them to answer the user.
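The SQL tool loop described above can be sketched in Python with the standard-library `sqlite3` module. Both LLM calls are hypothetical stubs with hard-coded outputs. The `orders` table and its data are invented for illustration. The point is that our code executes the query and feeds the rows back to the model. The model itself never runs anything.

```python
import sqlite3

SCHEMA = "orders(name TEXT, customer TEXT, total REAL)"  # the tool's "documentation"

def llm_generate_sql(question: str, schema: str) -> str:
    # Hypothetical stub: a real LLM would read the question and schema
    # and write this query itself. Hard-coded so the sketch runs offline.
    return "SELECT name, total FROM orders WHERE customer = 'alice' ORDER BY total DESC"

def llm_write_answer(question: str, rows: list) -> str:
    # Hypothetical stub for the second LLM call that turns raw rows into a reply.
    items = ", ".join(f"{name} (${total})" for name, total in rows)
    return f"Found {len(rows)} order(s): {items}"

def answer_with_sql(question: str, conn: sqlite3.Connection) -> str:
    sql = llm_generate_sql(question, SCHEMA)        # 1. the LLM writes the query
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("only read-only SELECT queries are allowed")  # our guardrail
    rows = conn.execute(sql).fetchall()             # 2. our code runs it
    return llm_write_answer(question, rows)         # 3. results go back to the LLM

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (name TEXT, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("lamp", "alice", 40.0), ("desk", "alice", 250.0), ("chair", "bob", 90.0)],
)
print(answer_with_sql("What did Alice buy?", conn))
# Found 2 order(s): desk ($250.0), lamp ($40.0)
```

The prefix check is only a crude guardrail. Connecting with a read-only database user is a sturdier way to keep generated SQL from modifying data.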

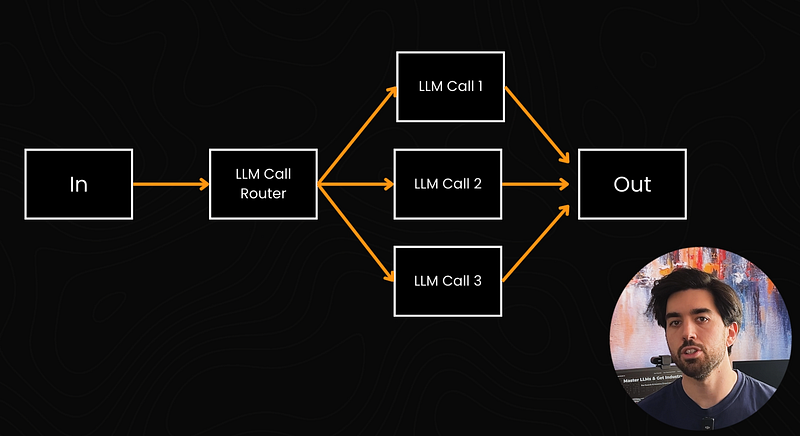

This is what another large share of people call agents. They are still not agents. This is simply a process, either hardcoded or with small variations like the routers we discuss in our course. Of course, it’s useful, and it’s super powerful. Yet, it’s not an intelligent being or something independent. It’s not an “agent” acting on our behalf. It’s simply a program we made and control. Or, as Anthropic calls it, a workflow.
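A router, one of the small variations mentioned above, can be sketched as follows. In practice the `classify` step is often a short LLM call that returns a label. Here it is a hypothetical keyword rule so the example runs offline, and the route names are invented. Every branch is written in advance, which is exactly why this remains a workflow.

```python
from typing import Callable

def classify(query: str) -> str:
    # Hypothetical router: often a small LLM call returning a single label;
    # keyword rules keep this sketch runnable offline.
    q = query.lower()
    if any(word in q for word in ("order", "invoice", "refund")):
        return "sales_db"
    if any(word in q for word in ("error", "crash", "bug")):
        return "support_docs"
    return "chat"

# Each route would be its own prompt, tool, or database call in practice.
ROUTES: dict[str, Callable[[str], str]] = {
    "sales_db": lambda q: f"query sales database for: {q}",
    "support_docs": lambda q: f"search support docs for: {q}",
    "chat": lambda q: f"answer directly: {q}",
}

def route(query: str) -> str:
    # Every branch is written by us in advance: this is still a workflow.
    return ROUTES[classify(query)](query)

print(route("Where is my refund?"))  # query sales database for: Where is my refund?
```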

Don’t get me wrong. A workflow is pretty damn useful, and it can get quite complicated and advanced. We can implement intelligent routers that decide which tool to use and when, give the LLM access to various databases and let it choose which one to query and when, have it execute tasks through action tools in code, and more. Plus, you can have as many workflows as you wish. Yet, I simply want to stress how different this is from an actual agent: the type of agent we dream of, and the type Ilya Sutskever mentioned in a recent talk at NeurIPS…

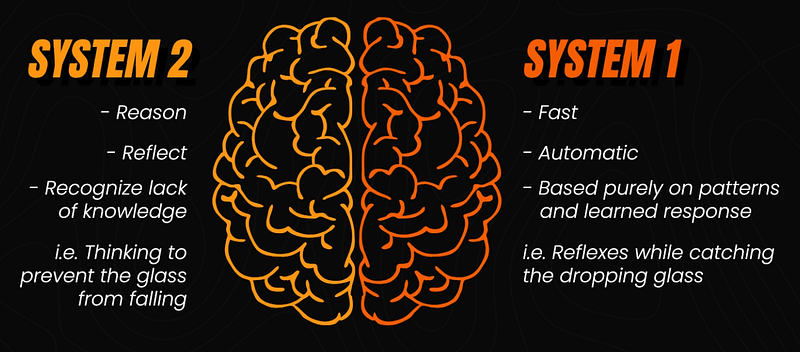

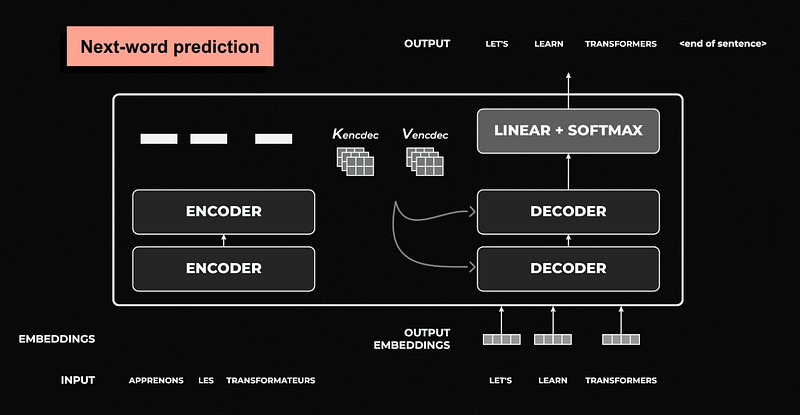

The next natural question might be: what exactly is a “real agent”? In simple terms, a real agent is something that functions independently. More specifically, it is something capable of employing processes like our System 2 thinking: able to genuinely reason, reflect, and recognize when it lacks knowledge. This is almost the opposite of our System 1 thinking, which is fast, automatic, and based purely on patterns and learned responses, like the reflex that lets you catch a falling glass. By contrast, System 2 thinking might involve deciding how to prevent the glass from falling in the first place, perhaps by using a nearby tool like a tray or by moving the fragile object out of the way. A real agent, then, would not only know how to use tools but also decide when and why to use them based on deliberate reasoning.

OpenAI’s new o1 and o3 series exemplify this shift. They begin exploring System 2-like approaches and try to make the models “reason” by first discussing with themselves internally, mimicking a human-like approach to reasoning before speaking. Traditional language models rely on next-word (or next-token) prediction. This is essentially a System 1, instant-thinking mechanism: based purely on what the model has learned, it guesses the next thing to say with no plan. These new models aim to incorporate deeper reasoning capabilities. That marks a move toward the deliberate, reflective thinking associated with System 2, which a true agent requires. But we are diverging a bit too much with this Kahneman parenthesis. Let me clarify what I mean by a real agent by going back to workflows and what they really are…

Workflows follow specific code paths and integrations and, apart from the LLM’s outputs, are pretty predictable. They power most of the advanced applications you see and use today, and for good reason: they are consistent, predictable, and incredibly powerful when leveraged properly. As Anthropic wrote, “Workflows are systems where LLMs and tools are orchestrated through predefined code paths.”
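Here is a minimal sketch of such a predefined code path. It is a two-step chain with a programmatic check between the steps, a pattern Anthropic’s article calls prompt chaining. `call_llm` is a hypothetical stub keyed on the step name, and the article topic is only an example. Our code fixes the order of the steps and decides whether the chain continues.

```python
def call_llm(prompt: str) -> str:
    # Hypothetical stub standing in for a real model call; it returns canned
    # text keyed on the step so the example runs without network access.
    if prompt.startswith("OUTLINE:"):
        return "1. What workflows are\n2. What agents are\n3. When to use each"
    if prompt.startswith("DRAFT:"):
        outline = prompt.removeprefix("DRAFT:").strip()
        return "Draft based on: " + outline.replace("\n", " | ")
    raise ValueError("unknown step")

def outline_is_valid(outline: str) -> bool:
    # Programmatic "gate" between steps: plain code, not the model,
    # decides whether the chain may continue.
    return len(outline.splitlines()) >= 3

def write_article(topic: str) -> str:
    outline = call_llm(f"OUTLINE: {topic}")      # step 1, always first
    if not outline_is_valid(outline):
        raise RuntimeError("outline failed the check; stopping the chain")
    return call_llm(f"DRAFT:\n{outline}")         # step 2, always second

print(write_article("agents vs workflows"))
# Draft based on: 1. What workflows are | 2. What agents are | 3. When to use each
```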

Here’s what a workflow looks like. We have our LLM, some tools or memory to retrieve additional context from, a bit of iteration with multiple calls to the LLM, and then an output sent back to the user. As we discussed, a system may need to do one task in some situations and another task in others. For those cases, a workflow can use a router with various conditions to select the right tool or the right prompt. Workflows can even run steps in parallel to be more efficient. Better still, we can have a main model, which we refer to as an orchestrator, that selects which fellow models to call for specific tasks and synthesizes their results.

Take our SQL example. A main orchestrator would receive the user query and decide whether it needs to query the dataset. If it does, it asks the SQL model to generate the SQL query, runs the query on the dataset, gets the results back, and synthesizes the final answer from all the information provided. This is pretty much how the ChatGPT interface works when it sometimes leverages Canvas or uses a code interpreter to better answer your needs. Even if complex and advanced, it is still all hardcoded. If you know what you need your system to do, however advanced it may be, you need a workflow.
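The orchestrator pattern, including workers running in parallel, can be sketched with Python’s `concurrent.futures`. The worker outputs, the `plan` rule, and the `synthesize` step are hypothetical stubs. In a real system each would be an LLM call, and the SQL worker would generate a query and run it on the dataset.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical workers: in a real system each would be its own LLM call,
# e.g. the SQL worker would generate a query and run it on the dataset.
WORKERS: dict[str, Callable[[str], str]] = {
    "sql": lambda query: "sql: 2 matching orders",
    "docs": lambda query: "docs: refunds accepted within 30 days",
}

def plan(query: str) -> list[str]:
    # Hypothetical orchestrator decision. A real orchestrator would ask an LLM
    # which workers to call, but it can only pick workers defined in code.
    q = query.lower()
    steps = []
    if "order" in q:
        steps.append("sql")
    if "refund" in q:
        steps.append("docs")
    return steps

def synthesize(query: str, results: list[str]) -> str:
    # Hypothetical final LLM call that merges the worker outputs into one answer.
    if not results:
        return f"Answered directly: {query}"
    return f"Answer to '{query}' using " + "; ".join(results)

def orchestrate(query: str) -> str:
    steps = plan(query)
    # Independent workers run in parallel; map() keeps results in step order.
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda name: WORKERS[name](query), steps))
    return synthesize(query, results)

print(orchestrate("Can I get a refund on my last order?"))
```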

For instance, what CrewAI calls agents function like predefined workflows assigned to specific tasks, while Anthropic envisions an agent as a single system capable of reasoning through any task independently. Both approaches have merit: one is predictable and intuitive, while the other aims for flexibility and adaptability. However, the latter is far harder to achieve with current models and, to me, better fits the definition of an agent.

So, about those “real” agents…

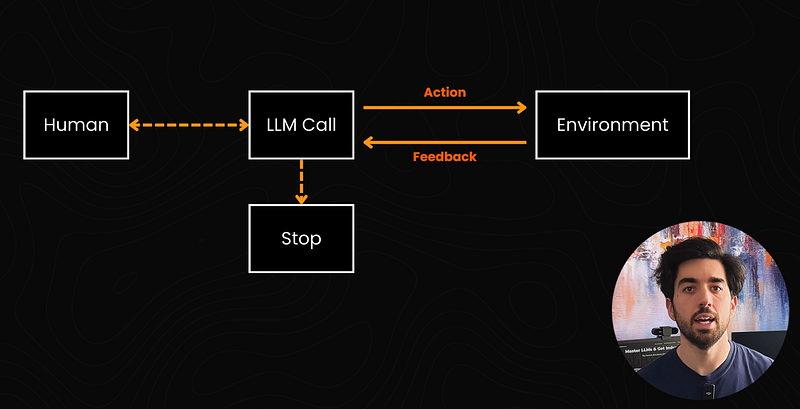

Agents “are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.” This is what Anthropic wrote, and I agree with it. Real agents make a plan by interacting with you and understanding your needs, iterating at a “reasoning” level to decide which steps to take to solve the problem or query. Ideally, a real agent will even ask you for more information or clarification instead of hallucinating, as current LLMs do. Still, their structure can be simple. They require three things. The first is a very powerful LLM, better than those we have now. The second is an environment to evolve in, like a discussion with you. The third is some extra powers, like tools they can use whenever they see fit, iterating as they go. In short, you can think of an agent as replacing a person or a role, and a workflow as replacing a task that person would do. There is no hardcoded path: the agentic system makes its own decisions. Agents are much more advanced and complex, and we still haven’t built them very successfully.
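By contrast, the core of an agent can be sketched as a loop in which the model picks each next step. It can call a tool, ask the user for clarification, or finish. Our code does not choose the path. The `decide` method below is a hypothetical, scripted stand-in for the much stronger LLM such an agent would need. The `weather` tool and its data are invented for illustration. The loop itself is short, and all of the difficulty lies in making `decide` good.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Action:
    kind: str        # "tool", "ask_user", or "finish"
    name: str = ""   # tool name when kind == "tool"
    arg: str = ""    # tool input, question for the user, or final answer

@dataclass
class Agent:
    tools: dict[str, Callable[[str], str]]
    history: list[tuple[str, str]] = field(default_factory=list)

    def decide(self) -> Action:
        # Hypothetical, scripted stand-in for a strong LLM: it reads the
        # history and chooses the next step. A real agent would reason here.
        said = [text for role, text in self.history if role == "user"]
        used_tool = any(role == "tool" for role, _ in self.history)
        if len(said) < 2:
            return Action("ask_user", arg="Which city do you mean?")
        if not used_tool:
            return Action("tool", name="weather", arg=said[-1])
        return Action("finish", arg=f"Here is what I found: {self.history[-1][1]}")

    def run(self, task: str, answer_user: Callable[[str], str], max_steps: int = 10) -> str:
        self.history.append(("user", task))
        for _ in range(max_steps):  # step budget: the path is not fixed in code
            action = self.decide()
            if action.kind == "finish":
                return action.arg
            if action.kind == "ask_user":
                self.history.append(("user", answer_user(action.arg)))
            elif action.kind == "tool":
                result = self.tools[action.name](action.arg)
                self.history.append(("tool", result))
        return "Stopped: step budget exhausted."

agent = Agent(tools={"weather": lambda city: f"Sunny in {city}"})  # hypothetical tool
print(agent.run("What's the weather like?", answer_user=lambda q: "Paris"))
# Here is what I found: Sunny in Paris
```

The `max_steps` budget is one simple way to bound the extra cost and latency that come with letting the model choose its own path.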

This independence, and the trust you place in your system, obviously make it more susceptible to failures, more expensive to run and use, and slower. Worst of all, the results aren’t that exciting yet, and when they are, they are highly inconsistent.

So, what is an actual good example of an agent? Two examples that quickly come to mind are Devin and Anthropic’s computer use. Yet, for now, they are disappointing agents.

If you are curious about Devin, Hamel Husain wrote a really good blog post sharing his experience using it. Devin offers an intriguing glimpse into the promise and challenges of agent-based systems. It is designed as a fully autonomous software engineer with its own computing environment, independently handling tasks like API integrations and real-time problem-solving. However, Hamel’s extensive testing showed that Devin excelled at simpler, well-defined tasks (the ones we can easily do ourselves). It struggled with complex or ambiguous ones, often proposing overcomplicated solutions or pursuing infeasible paths. Advanced workflow-based tools like Cursor don’t have as many issues. These limitations reflect the broader challenges of building reliable, context-aware agents with current LLMs, even when you raise millions.

Here, Devin aligns more with Anthropic’s vision, showcasing the promise and challenges of a reasoning agent: it tries to tackle complex problems autonomously but struggles with consistency. By contrast, workflows like those inspired by CrewAI are simpler and more robust for specific tasks, but lack the flexibility of true reasoning systems.

Similarly, we have Anthropic’s ambitious attempt at creating an autonomous agent with access to your computer, which generated a lot of hype but has since been largely forgotten. The system was undeniably complex and embodied the characteristics of a true agent: autonomous decision-making, dynamic tool usage, and the ability to interact with its environment. Its goal was also to replace anyone working on a computer: quite promising (or scary)! Still, its decline serves as a reminder of the challenges in creating practical agentic systems that not only work as intended but do so consistently.

In short, LLMs are simply not ready to become true agents yet. But that may change soon.

For now, as with all things code-related, we should always aim for the simplest possible solution to our problem. That way, we can iterate and debug easily. Simple LLM calls are often the way to go. That is also what people and companies often sell as an “agent,” but you won’t be fooled anymore. You may want to complement LLMs with external knowledge through retrieval systems or light fine-tuning. But the time and money it takes to pursue true agents should be saved for really complex problems that we cannot solve otherwise.
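As an illustration of that simpler route, here is a toy retrieval step that pastes the most relevant snippet into an ordinary LLM prompt. The documents are invented. Ranking by shared words is a deliberately naive stand-in, and production retrieval systems typically use embeddings or BM25 instead.

```python
import re

# Invented example knowledge base.
DOCS = [
    "Refunds are accepted within 30 days of purchase.",
    "Shipping to Canada takes 5 to 7 business days.",
    "Gift cards cannot be exchanged for cash.",
]

def tokenize(text: str) -> set[str]:
    # Lowercase word set; punctuation is dropped by the regex.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    # Naive lexical ranking: count words shared with the query.
    q = tokenize(query)
    return sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]

def build_prompt(query: str) -> str:
    # The retrieved text goes into the prompt of one ordinary LLM call.
    context = "\n".join(retrieve(query, DOCS))
    return f"Answer using this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("Are refunds accepted after purchase?"))
```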

I hope this article helped you understand the difference between workflows and real agents, and when to use each. If you found it helpful, please share it with your friends in the AI community and don’t forget to subscribe for more in-depth AI content!

Thank you for reading!

Links:

Anthropic’s blog on agents: https://www.anthropic.com/research/building-effective-agents

Anthropic’s computer use: https://www.anthropic.com/news/3-5-models-and-computer-use

Hamel Husain’s blog post on Devin: https://www.answer.ai/posts/2025-01-08-devin.html