DeOldify: Colorize your Black & White Photos with AI

This method is called DeOldify, and it works on pretty much any picture. If you don’t believe me, you can even try it yourself for free, as I will show in this article.

Watch the video and support me on YouTube!

DeOldify is a technique to colorize and restore old black-and-white images and even film footage. It was developed, and is still being updated, by a single person: Jason Antic. It is now a state-of-the-art way to colorize black-and-white images, and everything is open source, but we will get back to this in a bit.

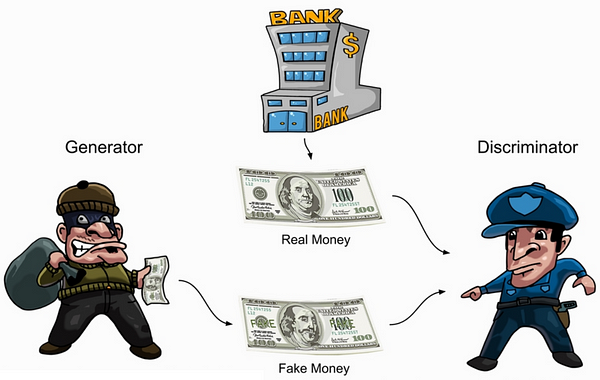

First, let’s see how he achieved this. DeOldify relies on a new GAN training method called NoGAN, which he developed himself to solve the main problems that appear when training a standard generative adversarial network (GAN), an architecture composed of a discriminator and a generator. Typically, GAN training updates the discriminator and the generator at the same time: the generator starts out completely random and improves over time to fool the discriminator, which tries to tell whether an image is generated or real. If this sounds completely abstract to you, I invite you to watch the video I made about GANs.
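
To make the alternating training concrete, here is a minimal sketch in plain Python of standard GAN training on a toy 1-D problem. It is not DeOldify’s code: the linear generator G(z) = a*z + b, the logistic discriminator, the learning rate, and the names `gan_step` and `params` are all illustrative assumptions. Each call performs one discriminator update followed by one generator update, using hand-derived gradients.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def gan_step(params, real_batch, noise_batch, lr=0.05):
    """One round of standard GAN training on toy 1-D data (hypothetical sketch).

    Generator:     G(z) = a * z + b
    Discriminator: D(x) = sigmoid(w * x + c), its estimate that x is real
    Both batches must have the same length.
    """
    a, b, w, c = params["a"], params["b"], params["w"], params["c"]
    n = len(real_batch)
    fake_batch = [a * z + b for z in noise_batch]

    # 1) Discriminator step: minimize -log D(real) - log(1 - D(fake)).
    gw = gc = 0.0
    for x_real, x_fake in zip(real_batch, fake_batch):
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        gw += (d_real - 1.0) * x_real + d_fake * x_fake
        gc += (d_real - 1.0) + d_fake
    w -= lr * gw / n
    c -= lr * gc / n

    # 2) Generator step (non-saturating loss): minimize -log D(G(z)),
    #    i.e. move the fakes toward where the updated discriminator says "real".
    ga = gb = 0.0
    for z in noise_batch:
        d_fake = sigmoid(w * (a * z + b) + c)
        grad_x = (d_fake - 1.0) * w  # derivative of -log D(x) with respect to x
        ga += grad_x * z
        gb += grad_x
    a -= lr * ga / n
    b -= lr * gb / n
    return {"a": a, "b": b, "w": w, "c": c}


# Real data ~ N(4, 1); the generator starts far away (b = 0).
rng = random.Random(0)
params = {"a": 1.0, "b": 0.0, "w": 0.0, "c": 0.0}
for _ in range(200):
    real = [rng.gauss(4.0, 1.0) for _ in range(64)]
    noise = [rng.gauss(0.0, 1.0) for _ in range(64)]
    params = gan_step(params, real, noise)
print(params)
```

A linear discriminator can only separate distributions by their means, so this toy shows the mechanics of the two interleaved updates rather than anything close to image generation; in a real GAN both networks are deep models trained with a framework’s automatic differentiation.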

His method, which he calls “NoGAN”, provides the same benefits as this usual GAN training while spending far less time on the GAN training itself, which is typically very expensive to compute. Instead, he first pre-trains the generator with a regular loss function, so that it is already powerful and reliable before adversarial training starts, which also makes the whole process faster.

This is done by training the generator the way you would train any regular deep network, such as a ResNet. That way, the model is already pretty good at colorizing an image before the complete GAN architecture is trained. Then, it only needs a short phase of the typical generator-discriminator GAN training to optimize the “realism” of the generated pictures.
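
The two-stage idea can be sketched on a toy problem. In the plain-Python example below, which is an illustration and not DeOldify’s implementation, the “colorization” task is a made-up 1-D mapping y = 2x + 1, the generator is y = a*x + b, mean squared error stands in for the regular loss, and a small conditional critic D(x, y) provides the adversarial term. The function names, step counts, learning rates, and the `adv_weight` parameter are hypothetical.

```python
import math


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def pretrain_generator(pairs, steps=2000, lr=0.1):
    """Pretraining: fit the generator y = a*x + b with plain MSE, no critic involved."""
    a = b = 0.0
    n = len(pairs)
    for _ in range(steps):
        ga = sum(2.0 * (a * x + b - y) * x for x, y in pairs) / n
        gb = sum(2.0 * (a * x + b - y) for x, y in pairs) / n
        a, b = a - lr * ga, b - lr * gb
    return a, b


def short_gan_phase(pairs, a, b, steps=20, lr=0.01, adv_weight=0.1):
    """Final phase: a few adversarial updates on top of the given generator.

    The critic D(x, y) = sigmoid(w1*x + w2*y + c) scores (gray, color) pairs.
    The generator keeps its MSE term and adds adv_weight * -log D(x, G(x)).
    """
    w1 = w2 = c = 0.0
    n = len(pairs)
    for _ in range(steps):
        # Critic step: real pairs (x, y) vs. generated pairs (x, a*x + b).
        g1 = g2 = gc = 0.0
        for x, y in pairs:
            y_fake = a * x + b
            d_real = sigmoid(w1 * x + w2 * y + c)
            d_fake = sigmoid(w1 * x + w2 * y_fake + c)
            g1 += (d_real - 1.0) * x + d_fake * x
            g2 += (d_real - 1.0) * y + d_fake * y_fake
            gc += (d_real - 1.0) + d_fake
        w1, w2, c = w1 - lr * g1 / n, w2 - lr * g2 / n, c - lr * gc / n

        # Generator step: gradient of MSE + adversarial term w.r.t. y_fake.
        ga = gb = 0.0
        for x, y in pairs:
            y_fake = a * x + b
            d_fake = sigmoid(w1 * x + w2 * y_fake + c)
            grad_y = 2.0 * (y_fake - y) + adv_weight * (d_fake - 1.0) * w2
            ga += grad_y * x
            gb += grad_y
        a, b = a - lr * ga / n, b - lr * gb / n
    return a, b


# Made-up "colorization" data: the target is a fixed function of the gray input.
pairs = [(i / 10, 2.0 * (i / 10) + 1.0) for i in range(11)]
a, b = pretrain_generator(pairs)     # most of the compute goes here
a, b = short_gan_phase(pairs, a, b)  # only a brief adversarial phase
print(round(a, 3), round(b, 3))
print(short_gan_phase(pairs, 0.0, 0.0))  # same short phase without pretraining
```

Because the pretrained generator already matches this toy target, the critic has almost nothing to separate and the short adversarial phase barely moves the weights, while the same 20 adversarial steps started from scratch leave the generator far from the target. On real photos, a plain regression loss tends to produce dull, averaged colors, and that is the gap the brief adversarial phase is meant to close.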

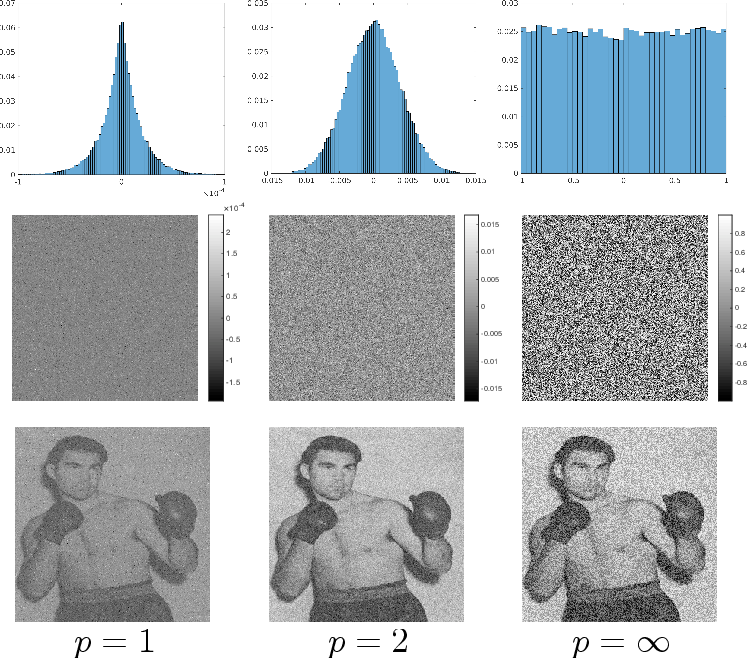

Gaussian noise is also randomly applied to the images during training to simulate real-world noise.

This is a form of data augmentation applied to the training images to improve the results and make the model more robust to noisy inputs. It uses the same idea as style transfer: the noise plays the role of the style we want to copy, and it can be applied to the image with more or less strength.
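
Here is a minimal sketch of this kind of noise augmentation, in plain Python on a grayscale image stored as rows of floats in [0, 1]. The function names, the default `max_sigma` value, and the choice of drawing a random noise strength per image (one reading of “applied with more or less strength”) are assumptions for illustration, not DeOldify’s actual training pipeline.

```python
import random


def add_gaussian_noise(image, sigma, rng=None):
    """Return a noisy copy of a grayscale image given as rows of floats in [0, 1].

    Every pixel gets independent zero-mean Gaussian noise with standard
    deviation `sigma`; results are clipped back into [0, 1].
    """
    if rng is None:
        rng = random.Random()
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row] for row in image]


def random_noise_augment(image, max_sigma=0.1, rng=None):
    """Draw a random noise strength per image, so training sees inputs ranging
    from clean (sigma near 0) to noticeably noisy (sigma near max_sigma)."""
    if rng is None:
        rng = random.Random()
    sigma = rng.uniform(0.0, max_sigma)
    return add_gaussian_noise(image, sigma, rng)


gray = [[0.2, 0.5, 0.8], [0.0, 1.0, 0.4]]
print(random_noise_augment(gray, rng=random.Random(42)))
```

Clipping keeps the noisy pixels in the valid range; in a real training loop the same operation would run on whole batches of tensors rather than nested lists.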

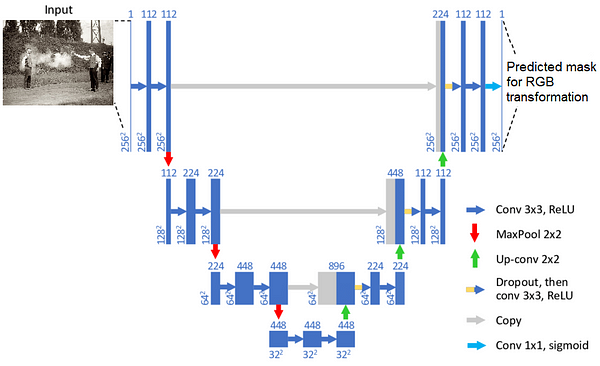

The whole architecture uses a basic ResNet backbone inside a U-Net, and this U-Net is the generator network in the GAN training. At the time of writing, there is no complete explanation of how this works, but the author is working on a paper about DeOldify in which he will investigate why and how his technique, so far found only by trial and error, works.
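
As a rough sketch of what “a ResNet backbone inside a U-Net” means for tensor shapes, the plain-Python function below traces (channels, height, width) through a generic U-Net whose encoder follows the stage widths and strides of torchvision’s ResNet-34. The decoder widths, the 3-channel output, and the name `unet_shapes` are illustrative assumptions; DeOldify’s actual generator is built with fastai and differs in its details.

```python
# Stage widths and downsampling factors of a ResNet-34-style encoder
# (as in torchvision's resnet34). Decoder widths below are made up.
ENCODER_STAGES = [
    ("stem", 64, 2),      # 7x7 conv, stride 2
    ("layer1", 64, 4),    # after the stride-2 max-pool
    ("layer2", 128, 8),
    ("layer3", 256, 16),
    ("layer4", 512, 32),  # deepest features: the U-Net bottleneck
]


def unet_shapes(height, width, decoder_channels=(256, 128, 64, 32), out_channels=3):
    """Trace (name, channels, height, width) through a generic ResNet-encoder U-Net."""
    if height % 32 or width % 32:
        raise ValueError("height and width must be multiples of 32")
    encoder = [(name, ch, height // s, width // s) for name, ch, s in ENCODER_STAGES]
    skips = encoder[-2::-1]  # every stage except the bottleneck, deepest first
    _, ch, h, w = encoder[-1]
    decoder = []
    for (skip_name, skip_ch, skip_h, skip_w), dec_ch in zip(skips, decoder_channels):
        h, w = 2 * h, 2 * w  # upsample by 2
        if (h, w) != (skip_h, skip_w):
            raise RuntimeError(f"size mismatch with skip from {skip_name}")
        ch += skip_ch        # concatenate the encoder features (skip connection)
        decoder.append((f"concat+{skip_name}", ch, h, w))
        ch = dec_ch          # a conv block then reduces the channel count
    # Final upsample back to the input size and projection to the output channels.
    decoder.append(("output", out_channels, 2 * h, 2 * w))
    return encoder, decoder


enc, dec = unet_shapes(256, 256)
for stage in enc + dec:
    print(stage)
```

Every decoder stage upsamples to exactly the resolution of an encoder stage, which is what lets the skip connections pass fine spatial detail from the encoder straight to the decoder; that matters for colorization because the output colors must line up with the edges of the input photo.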

You can find three things in the references below. First, there’s the GitHub repository, with a complete, detailed explanation of the technique and even Google Colab notebooks to use it yourself. Then, there’s a free API on DeepAI built on DeOldify, where you can simply click and try it yourself. Finally, if you are looking for the best results, the third link points to the most advanced version of DeOldify. It is available on MyHeritage’s website and is paid to use.

If you like my work and want to support me, I’d greatly appreciate it if you follow me on my social media channels:

- The best way to support me is by following me on Medium.

- Subscribe to my YouTube channel.

- Follow my projects on LinkedIn.

- Learn AI together, join our Discord community, share your projects, papers, best courses, find Kaggle teammates, and much more!

References:

GitHub repository with full code, in-depth explanation, and Colab notebooks: https://github.com/jantic/DeOldify

Free DeepAI image colorization API using DeOldify: https://deepai.org/machine-learning-model/colorizer

MyHeritage colorization tool (the best version of DeOldify available, paid): https://www.myheritage.com/incolor