Best Practices for Building and Deploying Scalable APIs in 2025

API Deployment for AI Engineers

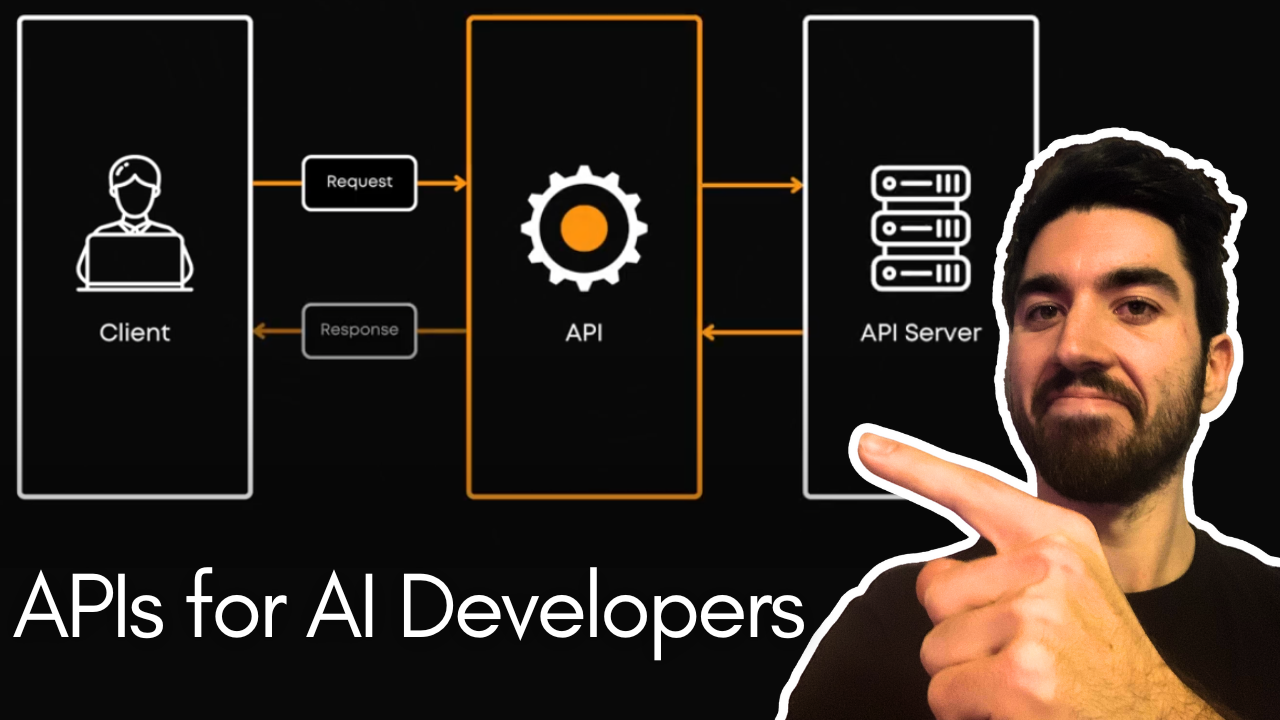

When we talk about building powerful machine learning solutions, like large language models or retrieval-augmented generation, one key element that often flies under the radar is how to connect all the data and models and deploy them in a real product. This is where APIs come in.

In this article, we’re diving into the world of APIs — what they are, why you might need one, and what deployment options are available.

Watch the video

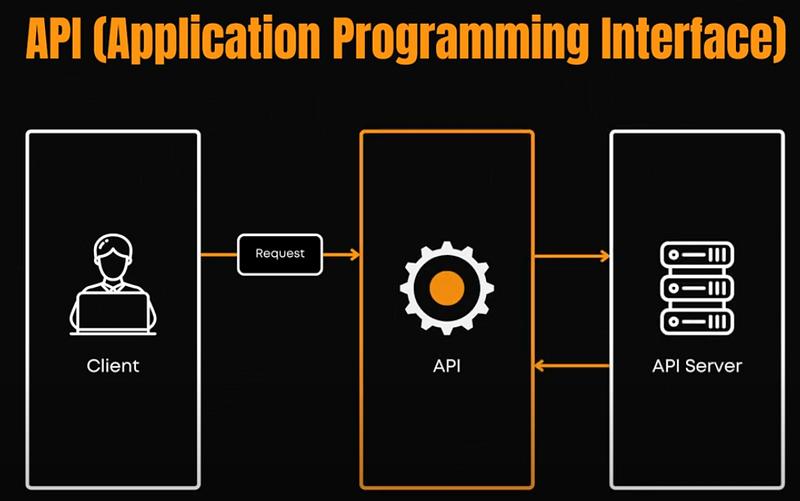

So, what exactly is an API?

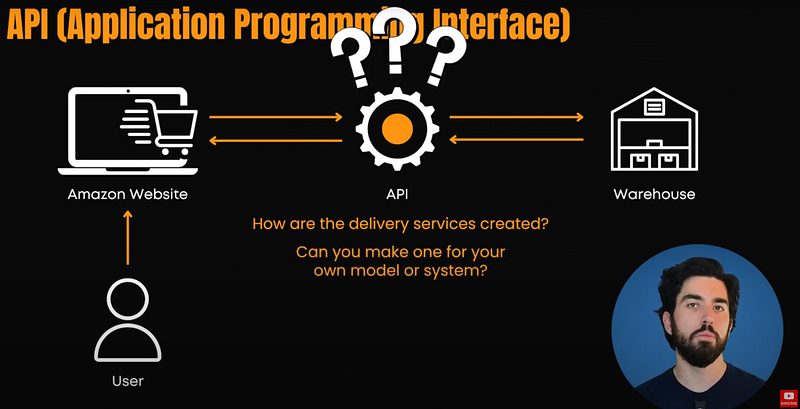

An API (or Application Programming Interface) is a bridge that allows two software applications to communicate with each other. For example, when you order something online, the store’s website (say, Amazon) is the application you interact with, and the warehouse is the service that holds all the products. In this case, the API is like a delivery service that carries your request (what you’re ordering) to the warehouse and brings the items back to the website for checkout.

In this way, APIs work like delivery services, allowing different software systems to “deliver” data or services to each other instead of physical products, as in our Amazon example. As a customer, you don’t need to know how the warehouse organizes its inventory; you just need the API to handle your request and give you the right result. This is how most developers use models like GPT and Claude now: they can’t see what the model is or how it works internally, but they can communicate with it by sending requests and receiving its outputs. But how are these delivery services created? Can you make one yourself so that others can use your own model or system? Of course.
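To make the delivery-service analogy concrete, here is a minimal sketch using only Python’s standard library. The `WarehouseHandler` class, the `/orders` path, and the JSON fields are all hypothetical names chosen for illustration; a real API would define its own. The point is that `place_order` only knows the request and response format, not how the “warehouse” works inside.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class WarehouseHandler(BaseHTTPRequestHandler):
    """Hypothetical 'warehouse' service: accepts a JSON order, returns a JSON result."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        order = json.loads(self.rfile.read(length))
        body = json.dumps({"item": order["item"], "status": "shipped"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence the default per-request logging


def place_order(host: str, port: int, item: str) -> dict:
    """The 'customer' side: send a request and read the response."""
    connection = http.client.HTTPConnection(host, port, timeout=5)
    try:
        connection.request(
            "POST",
            "/orders",  # hypothetical endpoint path
            body=json.dumps({"item": item}),
            headers={"Content-Type": "application/json"},
        )
        return json.loads(connection.getresponse().read())
    finally:
        connection.close()


def demo() -> dict:
    """Start the service on a free local port, place one order, then shut down."""
    server = HTTPServer(("127.0.0.1", 0), WarehouseHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return place_order("127.0.0.1", server.server_address[1], "book")
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` runs both sides in one process. In practice the service would live on another machine, and the client code would look the same apart from the host name.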

There are multiple steps in an end-to-end API lifecycle:

- Define: First of all, define your goals — figure out what the API should do, who’s going to use it, and how to keep it secure. You can also start setting up a workspace where the team can collaborate and store their progress, usually with tools like GitHub.

- Design: Next, it’s time to design the API’s structure — how it will handle and expose data to users. The design is captured in a standardized format (like OpenAPI) so both machines and people can understand it. It’s crucial to establish design rules here, from naming conventions to how data will be formatted, such as if it can only receive text and send back text or works with dictionaries or another kind of data. This ensures consistency across all APIs.

- Develop: Now, the actual coding begins! You or a developer need to write the code that brings the API to life, managing versions again through tools like Git.

- Test: Next, it’s time to catch the bugs before your users do! Testing is key to ensuring everything works correctly. Some tests might be run manually, while others are automated through pipelines to catch issues early, before moving into production. ChatGPT or Claude can be pretty useful for helping you set those up.

- Secure: At this point, the API is tested for vulnerabilities. The goal here is to ensure that only authorized users can access the API and its data.

- Deploy: Once everything checks out, the API is ready to be deployed. This means making it available in different environments like development, staging, or production.

- Observe: After deployment, it’s important to monitor the API’s performance in real time. This means monitoring not just your model’s outputs but also the API itself and its usage. That way, teams can identify and fix issues like slow response times or security vulnerabilities before they cause problems for users.

- Distribute: Finally, the API is made easily discoverable by using API catalogues, either internally or publicly, so that consumers can find and use it. This stage isn’t the end though — APIs often go through updates and improvements based on user feedback, starting the lifecycle over again. From that point, you have a product and need to maintain and improve it!
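A few of the stages above (Design, Develop, Test, and Secure) can be sketched in a handful of lines. This is only an illustration under stated assumptions: the `SummaryRequest` and `SummaryResponse` shapes, the `VALID_API_KEYS` set, and the truncation “model” are hypothetical stand-ins, not a recommended production setup.

```python
from dataclasses import dataclass

# Hypothetical key store; real keys belong in a secrets manager, not in code.
VALID_API_KEYS = {"demo-key"}


# Design: agree on the data format going in and coming out.
@dataclass
class SummaryRequest:
    text: str
    max_words: int = 20


@dataclass
class SummaryResponse:
    summary: str
    word_count: int


class Unauthorized(Exception):
    """Raised when the caller's API key is not recognized."""


# Develop: the code behind the endpoint.
def summarize(request: SummaryRequest, api_key: str) -> SummaryResponse:
    # Secure: only authorized callers get through.
    if api_key not in VALID_API_KEYS:
        raise Unauthorized("unknown API key")
    # Enforce the design rules on the input.
    if not request.text.strip():
        raise ValueError("text must not be empty")
    if request.max_words < 1:
        raise ValueError("max_words must be at least 1")
    # Placeholder logic standing in for a real model call.
    words = request.text.split()[: request.max_words]
    return SummaryResponse(summary=" ".join(words), word_count=len(words))


# Test: automated checks that can run in a pipeline before deployment.
def test_summarize() -> None:
    request = SummaryRequest("APIs connect software systems together", max_words=3)
    assert summarize(request, "demo-key") == SummaryResponse("APIs connect software", 3)
    try:
        summarize(SummaryRequest("hello"), "wrong-key")
    except Unauthorized:
        pass
    else:
        raise AssertionError("an unknown key should be rejected")
```

Keeping the request and response shapes explicit is what lets the same contract be written down later in a format like OpenAPI.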

Before you build and deploy an API, it’s important to ask yourself whether you really need one.

Deciding whether you need an API boils down to the scope and needs of your project. Let’s go back to the analogy of thinking of an API as a bridge — it connects different parts of your system or different platforms to one another. So, the real question is: Do you need that bridge?

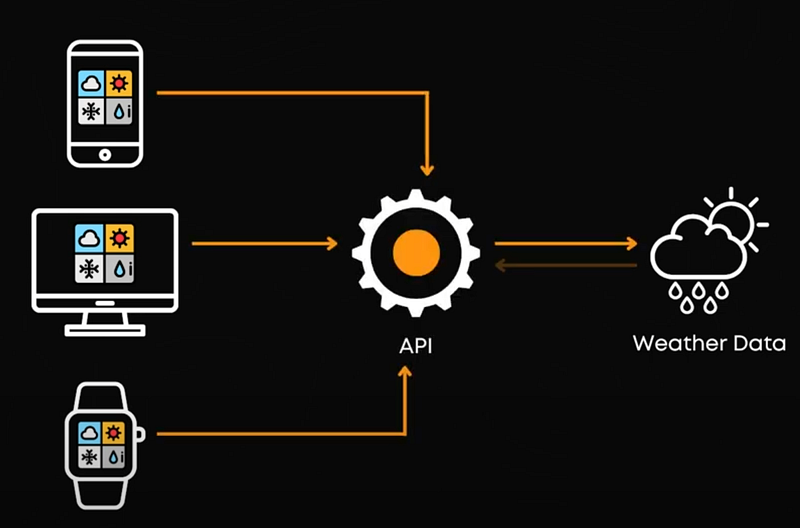

Let’s walk through some scenarios where using an API makes a lot of sense. If you’re building something that needs to work across multiple platforms — say, a web app, a mobile app, and maybe even a desktop app — and they all need to access the same data, then an API is crucial. For example, imagine a weather app that runs on your phone, your computer, and even a smartwatch. You’ll want to serve the same weather data to all of them, and an API makes that easy by acting as a central hub that everything connects to.

Another situation where you’d need an API is when you have to integrate with external services. Let’s say you’re building an e-commerce app and need to process payments through Stripe or PayPal. You don’t have to reinvent the wheel: you just plug into their API, and your app can easily handle secure payments. That’s what we did for our courses and the ebook using Stripe’s API. This is one of the major reasons companies use APIs: they let you leverage the work that’s already been done by others and integrate powerful features without building them from scratch. Likewise, you don’t have to reinvent GPT-4o; you can just use OpenAI’s API, which is much cheaper than building such a model yourself.

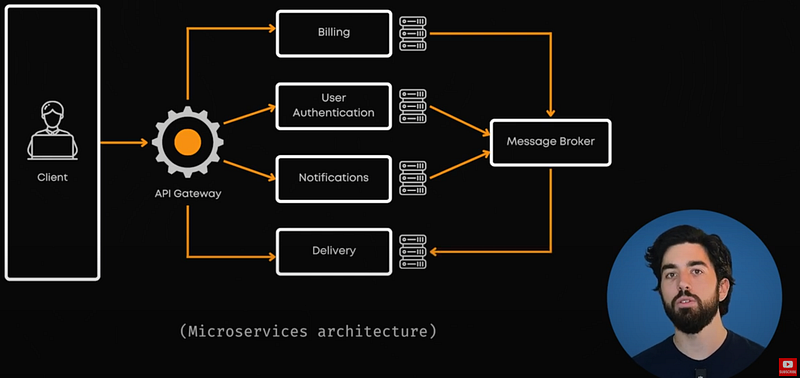

Now, if you’re expecting your project to grow and handle a lot of traffic, or if you want to build out different services that need to communicate with one another, an API will help you scale. This is particularly useful in microservices architectures, where different parts of your app, like billing, user authentication, or notifications, work as separate pieces but still need to talk to each other.

However, not every project needs an API. If you’re just building a simple website that’s only accessible from one platform, and there’s no need for external data or cloud services, adding an API might be overkill. For example, if you’re setting up a blog or a static portfolio website, you can manage everything within a single codebase without the added complexity of an API.

Similarly, if your app doesn’t need to communicate with any external platforms or sync data from the cloud, like a basic to-do list that saves data on your local device, you can skip the API altogether. In this case, your app can function just fine without that extra layer of complexity.

In short, if your project involves multiple platforms, external services, or scalable services, an API is a must. It’s also something to learn if you’ll end up coding for work. But if you’re building something small and simple that doesn’t need to talk to other apps or platforms, you can save yourself some time and skip it. The key is keeping things as simple as possible for your specific needs.

Once you’ve decided to build an API, the next question is where to deploy it.

There are various platforms to choose from depending on your needs and preferences. Let’s break down a few of the most popular options in 2024, highlighting the differences between the two main ways of deploying APIs, which are serverless and non-serverless deployments.

Serverless vs. Non-Serverless

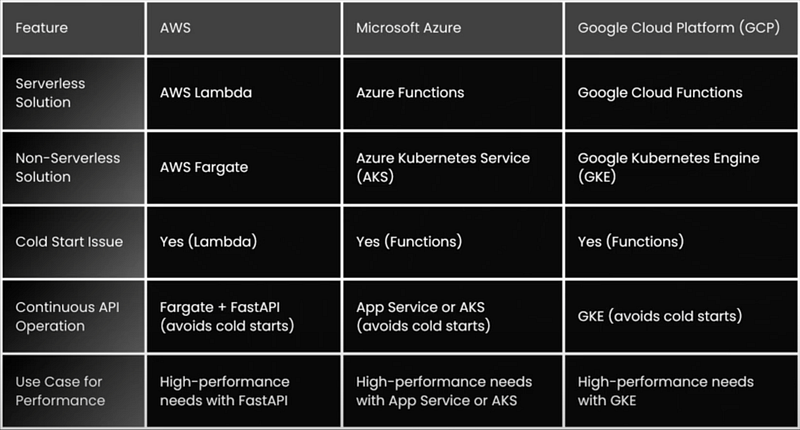

Serverless architecture refers to a cloud computing execution model where the cloud provider automatically manages the infrastructure. If you don’t want to worry about provisioning, scaling, or maintaining servers, serverless platforms, like AWS Lambda, Azure Functions, and Google Cloud Functions, are perfect, automatically scaling based on the number of requests. However, serverless functions are subject to cold starts, which cause delays when the function has been idle for a period of time.
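As a rough sketch of what a serverless function looks like, here is a Python handler in the shape AWS Lambda expects, `handler(event, context)`. It assumes the function sits behind an API Gateway proxy integration, where the request body arrives as a JSON string in `event["body"]` and the response is a dictionary with `statusCode`, `headers`, and `body`. The greeting logic and the `name` field are hypothetical.

```python
import json


def _response(status_code: int, payload: dict) -> dict:
    """Build a response in the API Gateway proxy format (assumed here)."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context):
    """Entry point the platform calls once per request; there is no server code to write."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})
    if not isinstance(payload, dict):
        return _response(400, {"error": "body must be a JSON object"})
    name = payload.get("name", "world")  # hypothetical request field
    return _response(200, {"message": f"Hello, {name}!"})
```

Because the handler is a plain function, it can be called locally with a hand-built `event` dictionary before anything is deployed.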

In contrast, non-serverless (or traditional) architectures involve managing your own infrastructure, whether on physical servers, virtual machines, or containerized environments. Containers, such as those managed with Docker, allow applications (including APIs) to run in isolated environments, ensuring consistency across different deployment environments. These containers can be orchestrated using tools like Kubernetes, where APIs remain always-on, avoiding cold start issues but requiring more management in terms of scaling and infrastructure.

Platform Breakdown

Before choosing a platform, it’s worth picking a framework to build the API itself. An interesting option is FastAPI, a high-performance web framework for building APIs with Python. Known for its speed and ease of use, FastAPI is well suited to applications requiring low latency and high throughput. It works particularly well in containerized, non-serverless environments, where APIs remain responsive without the cold start delays seen in serverless functions.

Now, regarding the actual platforms to deploy your APIs…

People use the three big names based on their preferences: Amazon, Microsoft and Google.

Amazon Web Services (AWS) is a leading cloud provider for scalability and integration with other cloud services. AWS Lambda allows for serverless deployment of APIs, as discussed. For applications needing real-time responsiveness, non-serverless solutions like AWS Fargate (for containers) are better suited. Combining FastAPI with Fargate ensures APIs run continuously without the latency associated with cold starts, making it a strong choice for high-performance needs.

Microsoft Azure offers both Azure Functions for serverless API deployment and Azure Kubernetes Service (AKS) for non-serverless container-based deployments. Azure Functions may experience cold starts like AWS Lambda, but for high-performance needs, Azure App Service or AKS can run APIs continuously.

Google Cloud Platform (GCP) is another popular cloud service known for its advanced AI and machine learning capabilities. For serverless APIs, Google Cloud Functions allows easy deployment, but cold starts can still be a challenge for latency-sensitive applications. To avoid this, Google Kubernetes Engine (GKE) is a non-serverless solution that ensures APIs run constantly, avoiding cold start delays.

For developers seeking a balance between simplicity and cost, DigitalOcean offers both serverless and non-serverless options as well, with its machine learning offering under the **Paperspace** name. DigitalOcean Functions provide a serverless solution, while DigitalOcean App Platform or Droplets (which are virtual machines) are better suited to avoiding cold start latency.

Heroku is known for its simplicity in deploying applications, offering a Platform-as-a-Service (PaaS) where developers can quickly deploy APIs.

For mobile and web apps that require real-time databases, Firebase is a nice option. Firebase Cloud Functions enable serverless API deployment, which is ideal for apps needing easy integration with Firebase’s real-time databases. However, like other serverless platforms, Firebase functions can experience cold starts. If your application is more latency-sensitive, Firebase may not be the best for APIs needing constant uptime, but it’s great for background processing and less time-sensitive functions.

Cold Start vs. Warm Start: When It Matters

To come back to this important difference: when using serverless platforms like AWS Lambda, Azure Functions, or Google Cloud Functions, cold starts happen after a period of inactivity, causing a slight delay when the function is first invoked. This is typically not an issue for occasional background tasks but can be problematic for real-time or high-performance APIs. To avoid these delays, many developers turn to non-serverless (or “always-on”) solutions, where APIs are hosted in containers or on virtual machines and are ready to respond at all times. The trade-off is cost: an always-on service is billed even when it sits idle.
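The difference can be simulated locally. In the sketch below, `load_model` is a hypothetical stand-in for expensive setup work (loading weights, opening connections), faked with a short sleep. The first call pays that cost, and later calls reuse the cached object, which is roughly what happens while a serverless instance stays warm. Real cold starts also include the time the platform needs to start the runtime itself, which this does not model.

```python
import time

# Module-level cache: it survives between calls for as long as the process stays alive.
_model = None


def load_model():
    """Hypothetical expensive setup, simulated with a short sleep."""
    time.sleep(0.2)
    return lambda text: text.upper()


def handler(text: str) -> dict:
    """Handle one request, loading the model only if this is a cold start."""
    global _model
    cold = _model is None
    start = time.perf_counter()
    if cold:
        _model = load_model()
    output = _model(text)
    return {
        "output": output,
        "cold_start": cold,
        "seconds": time.perf_counter() - start,
    }
```

Calling `handler` twice in a row shows the pattern: the first result has `cold_start` set to `True` and takes at least the simulated 0.2 seconds, while the second skips the loading step entirely.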

Each of these platforms offers robust features, but your choice will depend on your project’s scale, complexity, and performance requirements. If you need serverless, highly scalable options, AWS, Azure, and Google Cloud are excellent choices. I have personally used both AWS and GCP. For applications that need minimal latency and high performance, using FastAPI in a non-serverless, containerized environment might be the best fit. Otherwise, for quick proofs of concept or initial versions, a quick serverless deployment might be perfect and can cut costs considerably.

Ultimately, there’s no one-size-fits-all solution for deploying your API. The right choice depends on factors like scalability, budget, and the specific needs of your project.

Building, deploying, and managing an API is a crucial step for scaling products, connecting systems, and making sure everything communicates seamlessly. But it’s important to consider whether an API is necessary for your project. While it’s a relevant skill to have, the most important skill is to know what tools to use and when to use them. If you’re working across multiple platforms or need to integrate external services, an API is a must. But if your project is small and doesn’t need to interact with other systems, it’s perfectly fine to keep it simple.

Once you decide you need an API, there are many platforms available to deploy it, each catering to different project needs — from AWS for scalable services to Firebase for mobile development. Understanding your options will help you choose the best fit for your project’s goals.

We discuss APIs, and how to use and create them, in much more depth with applied examples in our RAG course.

Thank you for reading the article through to the end, and I hope you found it useful!