Find the Best AI Model for Your Specific Task

No-code Custom LLM Evaluation Demo

Watch the video (mainly for the tutorial!):

Good morning, everyone! This is Louis-François, CTO and co-founder of Towards AI. Today, I want to dive into an important topic: why not all Large Language Models (LLMs) are equal. In fact, treating them all the same can lead to missed opportunities.

Let’s see why ChatGPT shouldn’t be the only tool in your box, especially when we have an evolving lineup of diverse LLMs, each with unique strengths.

In this article, we will break down how to choose the best model for the job. Then, in the practical section, we will use an easy, no-code benchmark tool built by Integrail to compare LLMs, and revisit the multi-agent system we built in the previous article, which draws from multiple models in parallel.

What Are the Best LLMs and How Do We Know?

So, what makes one LLM “better” than another? Well, it depends on what you’re looking for: accuracy, speed, cost, or something else. Evaluating LLMs can be a challenge because new models are constantly being released, and the best options shift frequently. In a recent post, Clémentine Fourrier from Hugging Face highlighted the importance of evaluation metrics and practical use cases. We also know from my previous articles that evaluation isn’t just about one benchmark; it’s about assessing models across multiple dimensions.

Remember, an LLM is not the same thing as the system or app that runs it. ChatGPT, for example, is an interface for GPT models, but it’s not the only way to leverage those capabilities. This distinction becomes really important when we think about which model is best for which job.

Although LLMs share similar core functionalities, they vary significantly in performance, cost, and best use cases. Relying solely on one system like ChatGPT may mean that you’re not getting the most efficient or cost-effective solution. Think of it like a toolbox — just as you wouldn’t use a screwdriver for every fix, you shouldn’t use just one LLM for all your needs.

Different LLMs Have Different Strengths

Performance Variations

Let’s talk about some differences between popular LLMs. Models like GPT-4o might lead the pack in certain accuracy benchmarks, while smaller models like Llama 8B can be more efficient and much cheaper for easier tasks. Accuracy, speed, and cost each affect which model is best for a given situation.

For example:

- Claude 3.5 Sonnet by Anthropic is designed to handle complex conversational tasks and reason through questions. I really love it for coding and writing.

- Llama 3.1 by Meta is the latest version of the Llama series, with models ranging up to 405 billion parameters. It has an expanded context length of 128,000 tokens, making it particularly effective for handling long-form text, maintaining context, and performing complex reasoning tasks efficiently.

- Gemini is ideal for managing longer documents and maintaining context over extended inputs, making it suitable for tasks involving extensive content.

- GPT-4o from OpenAI often shines in generating coherent, human-like text and complex problem-solving, but at a higher cost. Of course, o1 is also amazing, but it comes with much higher latency and cost.

- Code Llama is a specialized model for code generation, with variants fine-tuned for Python and other coding tasks, making it a great option for developers looking to streamline their workflows.

You can think of these models like specialists: one may be better at reasoning, another at generating creative content, and a third at delivering results quickly and affordably. For tasks like coding or complex reasoning, GPT-4o or Claude 3.5 Sonnet might be your go-to. But for simpler generation or answering basic questions, a lighter model like Llama or Gemini Flash might be a smarter choice.
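To make the "right specialist for the job" idea concrete, here is a minimal sketch of a model picker that returns the cheapest option meeting a task's quality and latency needs. The model names, quality scores, prices, and latencies are all made-up placeholders for illustration, not real figures for any provider, and `pick_model` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    quality: int                # 1 (basic) to 5 (strongest); illustrative score only
    cost_per_1k_tokens: float   # placeholder value, not a real price
    latency_s: float            # placeholder value, not a measured latency


# Hypothetical catalog: every number below is invented for the example.
CATALOG = [
    ModelProfile("large-frontier-model", quality=5, cost_per_1k_tokens=0.010, latency_s=3.0),
    ModelProfile("mid-size-model", quality=4, cost_per_1k_tokens=0.003, latency_s=1.5),
    ModelProfile("small-fast-model", quality=2, cost_per_1k_tokens=0.0002, latency_s=0.4),
]


def pick_model(min_quality, max_latency_s=float("inf"), catalog=CATALOG):
    """Return the cheapest model that meets the quality and latency requirements."""
    candidates = [
        m for m in catalog
        if m.quality >= min_quality and m.latency_s <= max_latency_s
    ]
    if not candidates:
        raise ValueError("no model satisfies the requirements")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


# A basic Q&A task tolerates a weaker model; complex reasoning does not.
simple_choice = pick_model(min_quality=2)
complex_choice = pick_model(min_quality=5)
```

In practice, you would fill the catalog with numbers from your own benchmark runs rather than guesses.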

When you rely on a single model, you risk missing out on advantages like cost savings or the ability to handle specific tasks more effectively. Not to mention the challenge of downtime! Recently, Integrail mentioned the importance of testing models before migrating, especially considering OpenAI’s upcoming discontinuation of older versions of GPT-3.5. Switching to a different LLM could save a lot of trouble during such transitions.

Practical Demo: Comparing LLMs Using Integrail’s Benchmark Tool

Now, let’s move to the practical demo using Integrail’s Benchmark Tool. First, you can easily compare LLMs side by side, which is super useful for quality checks or “vibe checks”. You should always check the answers and run some edge-case tests yourself!

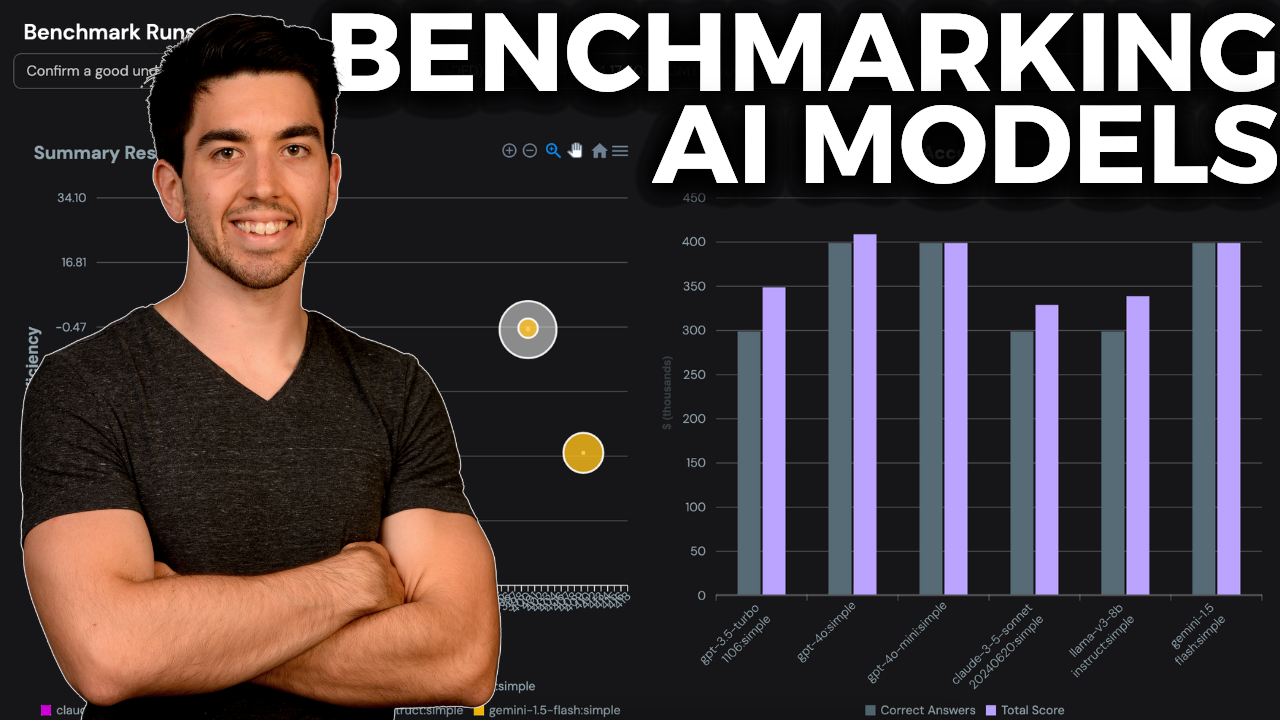

Then, there’s the amazing part. Integrail has built its own Benchmark Tool, which provides an easy way to evaluate how various models perform on the same task, comparing factors like accuracy, cost, and speed.

You just create your benchmark with questions and the answers the LLM should provide. In my case, the benchmark tested understanding of the MetaGPT paper, which I sent to the system we built in the previous article to extract relevant information and generate social posts from it. Here, I created questions and answers to confirm the model’s understanding of the paper, but you can create any custom evaluation you want.

Then, you simply select all the models you want to test and run the benchmark. Finally, this is the nice part: you can grade all the results. Integrail has built an internal fine-tuned model that grades results and, even better, explains its grading. It goes through every model’s answers and grades each one with an explanation. You get an overall idea of which model performs best for your task, and you can dig deeper to see why it generates better results. Take the time to understand the results and check whether they are really what you want.
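The benchmark loop described above (question and reference-answer pairs, several models, one grade per answer) can be sketched in a few lines. This is not Integrail's implementation: the models here are stub functions, and the grader is a crude keyword-overlap check swapped in for the fine-tuned grading model. All names (`run_benchmark`, `keyword_grader`, the stub models) are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Benchmark = List[Tuple[str, str]]  # (question, reference answer) pairs


def _words(text: str) -> set:
    """Lowercase words with trailing punctuation removed."""
    return {w.lower().strip(".,!?") for w in text.split()}


def keyword_grader(answer: str, reference: str) -> float:
    """Fraction of reference words present in the answer.

    A crude stand-in for an LLM-based grader, used only to keep the sketch runnable.
    """
    ref_words = _words(reference)
    if not ref_words:
        return 0.0
    return len(ref_words & _words(answer)) / len(ref_words)


def run_benchmark(
    models: Dict[str, Callable[[str], str]],
    benchmark: Benchmark,
    grader: Callable[[str, str], float] = keyword_grader,
) -> Dict[str, float]:
    """Ask every model every question and return each model's average grade."""
    scores = {}
    for name, ask in models.items():
        total = sum(grader(ask(question), reference) for question, reference in benchmark)
        scores[name] = total / len(benchmark)
    return scores


# Toy benchmark and stub "models" (plain functions standing in for API calls).
benchmark = [
    ("What color is the clear daytime sky?", "blue"),
    ("How many days are in a week?", "seven"),
]
models = {
    "model_a": lambda q: "It is blue." if "sky" in q else "There are seven days.",
    "model_b": lambda q: "blue",
}
scores = run_benchmark(models, benchmark)
best_model = max(scores, key=scores.get)
```

Swapping `keyword_grader` for a call to a grading model is the part that makes results trustworthy on open-ended answers; the loop itself stays the same.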

For instance, as you see here, models sometimes generate responses that are far too long. This biases the evaluation toward marking them as good answers because they contain all the relevant information, even when a simple bullet list would have worked. This is why it’s important to check results manually or add such details to your evaluation criteria.
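One simple way to encode the length concern in your own evaluation criteria is to flag answers that are much longer than the reference and discount their score. The sketch below is an assumption about how you might do this yourself, not a feature of the tool; the `max_ratio` and `penalty` values are arbitrary and should be tuned to your task.

```python
def is_too_verbose(answer: str, reference: str, max_ratio: float = 3.0) -> bool:
    """True when the answer has more than max_ratio times the reference's word count."""
    reference_length = max(len(reference.split()), 1)
    return len(answer.split()) > max_ratio * reference_length


def length_adjusted_score(
    score: float,
    answer: str,
    reference: str,
    max_ratio: float = 3.0,
    penalty: float = 0.5,
) -> float:
    """Multiply the score by `penalty` when the answer is flagged as too verbose."""
    if is_too_verbose(answer, reference, max_ratio):
        return score * penalty
    return score


reference = "seven days"
long_answer = "There are seven days in every single week of the year"
short_answer = "Seven days"

adjusted_long = length_adjusted_score(1.0, long_answer, reference)
adjusted_short = length_adjusted_score(1.0, short_answer, reference)
```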

Here, we see that GPT-4o is still outperforming all other models for this task, which isn’t that surprising.

Advantages of Using Multiple LLMs

To wrap up this section: there are clear advantages to using multiple LLMs in your workflow instead of trying to pick the single model that is best on average across all tasks. You can get the best performance for each part of a task by leveraging the strengths of different models. For example, Claude might be better at generating creative content, whereas Gemini is ideal for maintaining context over longer inputs. This also helps you manage model downtime, ensuring that you’re not reliant on a single API.
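The downtime point can be illustrated with a small fallback chain: try the preferred model first and move to the next one if the call fails. This is a minimal sketch with stub functions in place of real API clients; `ask_with_fallback` and the provider names are hypothetical.

```python
def ask_with_fallback(prompt, providers):
    """Try each (name, call) pair in order and return the first successful answer.

    `providers` is a list of (name, callable) tuples; each callable takes a prompt
    and returns text, or raises an exception when the service is unavailable.
    """
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose in this sketch; narrow it in real code
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stubs standing in for real API calls.
def primary_model(prompt):
    raise ConnectionError("service unavailable")


def backup_model(prompt):
    return "echo: " + prompt


used, answer = ask_with_fallback(
    "Summarize this paper.",
    [("primary", primary_model), ("backup", backup_model)],
)
```

Because different models respond differently to the same prompt, it is worth benchmarking the backup on your task before relying on it.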

Remember, even though Integrail’s benchmark tool is super useful, LLM evaluation isn’t just about using one benchmark. It’s ideal to assess models with multiple evaluations like BIG-bench, TruthfulQA, MMLU, Word-in-Context, and the LMSYS Chatbot Arena. Using multiple benchmarks gives you a well-rounded view of the strengths and limitations of each model.

Creating a Multi-Agent Application for Multiple LLM Responses

What is a Multi-Agent System in This Context?

Now, let’s take this a step further by creating a system that asks multiple LLMs to solve a task in parallel, ensuring you get the best answer possible — kind of like having a team of specialists working together. This is where multi-agent applications come into play. With tools like Integrail, you can build workflows that allow different LLMs to collaborate on a problem, with each model focusing on what it does best. In a previous article, we built a full multi-agent system. Here’s a quick recap as it also leverages different LLMs for each task.

As you know, I often cover new papers and techniques in the field on the channel. Part of what I do is post on LinkedIn or Twitter to summarize the key insights from the papers. It requires me to read the paper, understand what the researchers did, judge whether it’s really relevant, and synthesize it all into clear bullet points.

Of course, ChatGPT can do a good job at it if you send the paper, but it often requires multiple back-and-forths.

Then, we want one version for LinkedIn and another for Twitter, written as a thread that respects the maximum post length. It can be done again with ChatGPT, but it still requires some back-and-forth.

And then we’d like a nice cute or funny image, why not? Again, more back-and-forth with DALL-E or another image generator of your choice.

Instead, I replicated this process in one multi-agent workflow that simply needs the PDF as input and generates everything else on its own. Here, I just pasted the text for simplicity, but you can build a system that is as complex as you want. The input goes through the first agent, Gemini (chosen for its longer context), which extracts the relevant text from the paper. Then three other agents are in charge of making the posts and the image, each with its own specific prompt. I used GPT-4o and DALL-E for those, but Claude would have been interesting to try as well, and the same goes for the image generation model. You can easily pick all of these right from the Integrail studio.
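The shape of this workflow (one extraction step, then three independent agents running in parallel) can be sketched as follows. This is not how Integrail builds it; it is a plain-Python illustration in which every agent is a stub function standing in for a model call, and all function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def extract_key_points(paper_text, max_points=3):
    """Stub for the long-context extraction agent: keep the first few sentences."""
    sentences = [s.strip() for s in paper_text.split(".") if s.strip()]
    return sentences[:max_points]


def write_linkedin_post(points):
    """Stub for the LinkedIn agent: one post with bullet points."""
    return "Key insights:\n" + "\n".join(f"- {p}" for p in points)


def write_twitter_thread(points, max_len=280):
    """Stub for the Twitter agent: one numbered post per point, cut to max_len."""
    return [f"{i}/{len(points)} {p}"[:max_len] for i, p in enumerate(points, 1)]


def write_image_prompt(points):
    """Stub for the image agent: a prompt you would send to an image model."""
    return f"A playful illustration about: {points[0]}"


def run_workflow(paper_text):
    """Extract key points once, then run the three writer agents in parallel."""
    points = extract_key_points(paper_text)
    if not points:
        raise ValueError("no content could be extracted from the paper text")
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = {
            "linkedin": pool.submit(write_linkedin_post, points),
            "twitter": pool.submit(write_twitter_thread, points),
            "image_prompt": pool.submit(write_image_prompt, points),
        }
        return {name: future.result() for name, future in futures.items()}


result = run_workflow("First idea. Second idea. Third idea. Fourth idea.")
```

Threads fit here because real agents would spend most of their time waiting on network calls, so the three writers can overlap instead of running one after another.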

With a single chat system or a single LLM, this would have been impossible, or at best less robust and much more complex, with lots of back-and-forth.

Conclusion

So, next time you have a task at hand, don’t just reach for ChatGPT by default. Think about what you need (accuracy, speed, cost-effectiveness) and pick the right LLM accordingly. If the task is repetitive, consider building a more complex agentic system to automate much of the process, which we cover in depth in our other article with Integrail.

Always remember to use multiple evaluations like BIG-bench, TruthfulQA, MMLU, Word-in-Context, the LMSYS Chatbot Arena, and Integrail’s custom benchmark for your task.

Thanks for reading, and if you found this useful, don’t forget to subscribe for more AI insights. I’ll see you in the next one!