How FlashMLA Cuts KV Cache Memory to 6.7%

DeepSeek's Game-Changer for LLM Efficiency

Watch the video!

Good morning everyone! This is Louis-François from Towards AI, and if you’ve watched my previous videos on embeddings, Mixture of Experts, infinite context attention, or even my video on CAG, you know I’m always excited to dig deep into clever solutions that make large language models run better and faster.

Well, hold onto your seat because DeepSeek released a new technique that does exactly that — FlashMLA.

Let’s explore what it is, why it matters, and how it builds on key innovations we’ve already discussed.

To understand FlashMLA, let’s start with a key concept in transformers: the KV cache. Every time a language model predicts the next word in a sentence, it needs to decide which parts of the text are most relevant.

In a transformer-based model, “attention” is the mechanism that figures out which parts of the text are most relevant, deciding which words deserve more focus to predict the next token.

To implement this, the model generates three sets of vectors — Query, Key, and Value (Q, K, and V). Think of the Query as a search request asking, “What information is important for this word?” while the Keys and Values capture the details of everything the model has already processed. Instead of recalculating these vectors for every token, the model stores them in the KV cache, which speeds up future lookups and saves on repetitive tokenization and embedding work. (By the way, I have a whole video on CAG if you’re interested!)

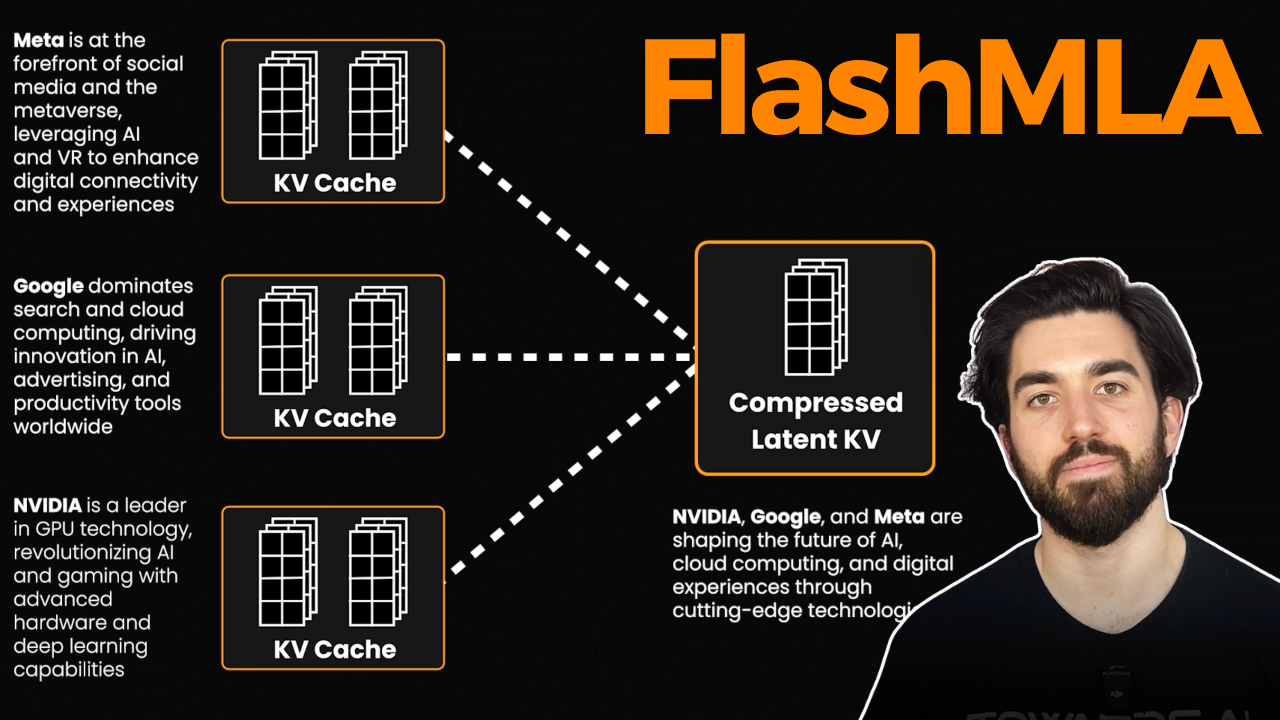

But there’s a challenge: as the context grows, so does the KV cache — and it can quickly eat up massive amounts of memory. This happens because transformers don’t use just one attention mechanism; they employ multi-head attention. Each head examines the text from a different angle — one might track subject-verb relationships, another might focus on synonyms, and yet another might capture sentence structure. While this multi-head approach enriches the model’s understanding, it also means each head requires its own set of KV pairs. As a result, when processing longer contexts or handling real-time text, these separate Keys and Values multiply, leading to enormous memory demands.

This is where Multi-head Latent Attention (MLA) came in. Instead of storing detailed Keys and Values for every token, MLA compresses attention history into compact “latent” representations. The idea is to maintain context without holding onto every last detail, reducing the memory load. It’s very much like how infini-attention, which we covered a few months ago, handles big contexts with a clever way of avoiding the computational and memory pitfalls of standard self-attention. But MLA specifically targets the KV cache bottleneck by drastically cutting its size. It does this using a technique called low-rank compression, which reduces the amount of memory needed to store KV pairs without losing too much information. Instead of keeping a full-resolution memory of every Key and Value, MLA finds a more compact way to store the most important parts. This is similar to how image compression works: a high-quality photo takes up a lot of space, but you can compress it to a lower resolution while still preserving most of its details.

However, just like with images, there’s always a trade-off: the more we compress, the more details we risk losing. This is a crucial limitation to keep in mind.

So, where does FlashMLA come in? FlashMLA is DeepSeek’s open-source optimized implementation of this Multi-head Latent Attention. It rethinks the kernels running on Hopper GPUs to ensure MLA computations happen faster than a typical multi-head attention approach while still preserving accuracy. This means that as memory usage decreases, you don’t pay for it in slower performance. Think of it as layering the efficiency gains we might see from Mixture-of-Experts (which itself manages compute by routing tokens to specialized feed-forward subunits) and the convenience of storing partial computations (like in Cache-Augmented Generation), but now we’re applying that principle directly to the attention mechanism.

By compressing and accelerating MLA through FlashMLA, we can significantly boost GPU resource efficiency. FlashMLA employs a low-rank compression method for KV caching — reducing the KV cache size to roughly 6.7% of traditional methods (flashmla.net). This optimization helps mitigate the “lost-in-the-middle” issue by enabling longer contexts to be processed without overwhelming memory. However, while this compression improves speed and efficiency, it may lead to a loss of fine-grained details in tasks that require high precision. Furthermore, FlashMLA is specifically optimized for NVIDIA’s Hopper GPUs (like the H100 and H200) and currently supports only BF16 and FP16 precision, so its benefits might not extend to older hardware or scenarios demanding higher numerical accuracy.

So if you’re building memory-intensive applications — like a chatbot handling thousands of concurrent queries or a knowledge base that needs near-instant lookups — FlashMLA might be a game-changer. You can integrate it with your existing pipeline, and it’ll feel like you gave your GPU a major upgrade for free.

In the future, expect more LLMs to adopt these specialized forms of attention. Just like infini-attention tackled the full context scaling problem and CAG streamlined how we reuse context, FlashMLA reimagines how we store it. Combined with other breakthroughs — like advanced embedding strategies or MoE layers for more efficient parameter usage — this kind of memory innovation is a critical piece of the puzzle for next-generation AI.

And that’s it! FlashMLA shrinks your memory footprint while keeping performance crisp and quick. This is a big factor of why DeepSeek is so fast and powerful!

If you found this article helpful, share it with your friends in the AI space and stay tuned for more deep dives into the latest in AI research and development.

Thank you for reading!