Image Editing from Text Instructions: InstructPix2Pix

The hottest image editing AI, InstructPix2Pix, explained!

Watch the video

We know that AI can generate images; now, let’s edit them!

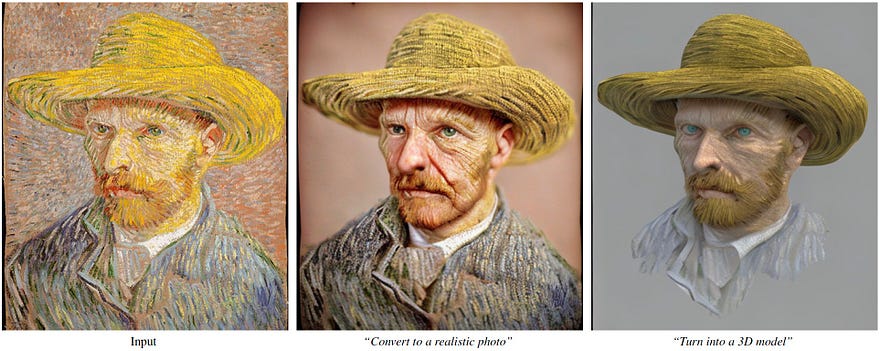

This new model called InstructPix2Pix does exactly that; it edits an image following a text-based instruction given by the user.

Just look at those amazing results… and that is not from OpenAI or google with an infinite budget.

It is a recent publication from Tim Brooks and collaborators at the University of California, including prof. Alexei A. Efros, a well-known figure in the computer vision industry.

As you can see, the results are just incredible.

Feel free to also try it yourself with the link below, as they shared a demo of the model on huggingface, which you can use for free!

What’s even more incredible is how they achieved those results. Let’s dive into it!

Image editing… More, image editing from text instructions. This is even more complex than image editing with graphical suggestions using some kind of sketch or segmentation on your image to orient your model.

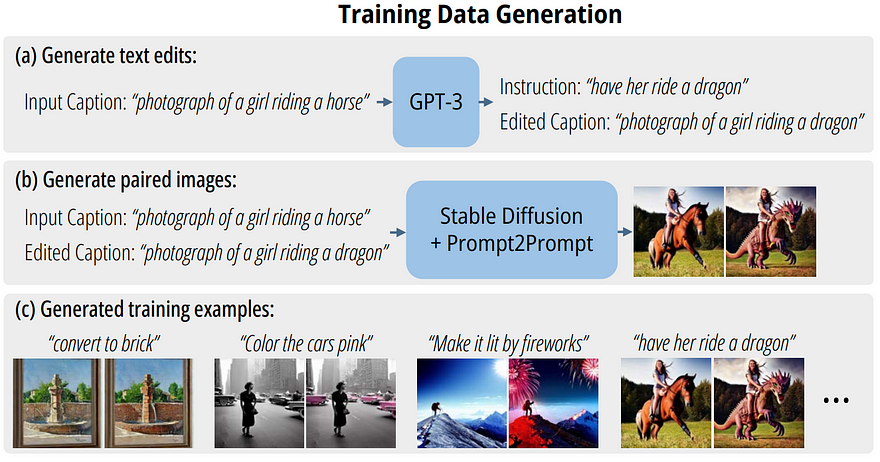

Here, we want to take an instruction and an image and automatically edit the image based on what’s being said. Your AI needs to understand both texts and then understand the image to know what to edit and how. But how does it do that? Well, they would typically need two models, one for language and one for images, then try to figure out a way to have both communicate and work well together. As I said, they used the popular models GPT-3 and Stable Diffusion. But these models are not in the final solution. So what did they do, and why did they need GPT-3 and Stable Diffusion?

They needed both models to build a powerful dataset appropriate for the task and use it to train a more specific model, simplifying the task by teaching only one model to perform such text-based edits instead of trying to use two general and powerful models for a very specific task. Indeed they used a version of the GPT-3 model to generate instructions and edit image captions with them.

These generated new captions are then sent to generate the image along with an edited version of it thanks to a third model, prompt-to-prompt, which can edit an image based on modifications of the text that was used to generate it. So at this point, we are using three powerful models that already have been trained in order to artificially create data for our new editing task: GPT-3 for generating text, Stable Diffusion for generating images, and finally, prompt-to-prompt for editing them based on the edited caption.

And we end up with our immense dataset of pairs of images along with simple instructions from our first step. More precisely, about half a million of such examples. We now only need one new model that could take an image and text as conditions to generate a new image, which would then learn from these examples to modify the initial image with the text to re-create the pair of images we see here. So it’s much more simple since our new, very specific model can just learn to copy-paste this editing process through what we call a supervised learning process. It doesn’t need to understand the images and the text. It just needs to understand that when the text says this, you need to edit this.

It will have somewhat of an understanding of the different concepts and will be super powerful to this specific editing task on data similar to the training set, but its level of understanding will be far from the individual models we discussed and even farther from our understanding. When you can't make it smarter, make it more specific!

This entirely artificially-created dataset is by far the coolest part of this project, but we still need a model to leverage those data.

The one in question here is called InstructPix2Pix.

And as I said, it’s available for free online if you’d like to try it.

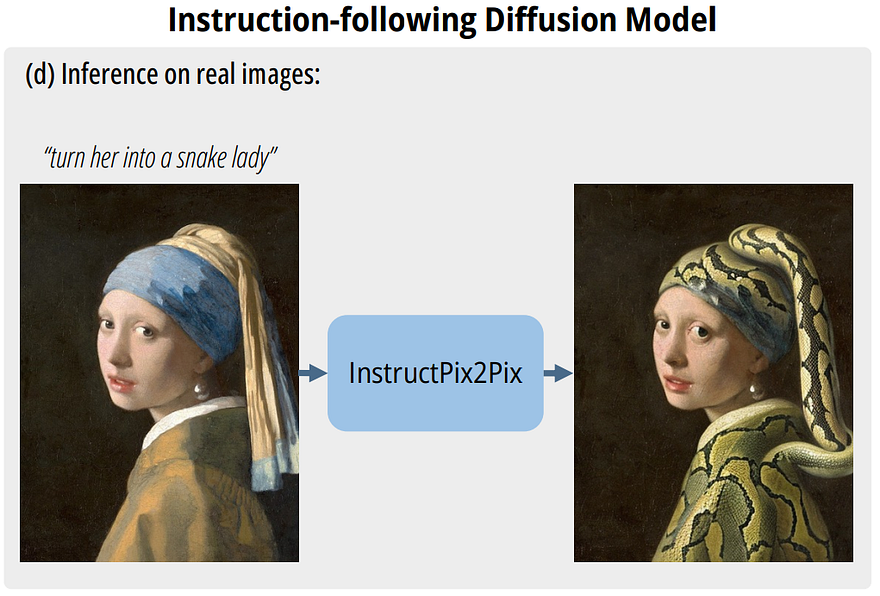

InstructPix2Pix is a new model based on diffusion, which is definitely not a surprise if you’ve been following the channel. This is the same kind of model used by DALLE, Stable Diffusion, and other recent image-based AIs.

Their model is based on none other than Stable Diffusion due to its power and high efficiency. I already covered the Stable Diffusion model in a video, which I will refer you to, but let’s see what they did differently here. Quickly, diffusion models use noise to generate images. Put simply, this means they will train a model to iteratively add noise, basically somewhat random values their model can control, to an initial set of random pixels in order to end with a real image. Sounds like magic, but it works because of the model’s training. This is thanks to a clever training process of taking image examples and randomly adding noise until the image is complete noise.

Here we don’t only want to generate an image, but we also want to control it with our initial image and only edit what our text says. To do that, they will lightly modify the existing Stable Diffusion architecture to allow for an initial image to be sent along with the text, as the Stable Diffusion architecture can already take text as conditions to generate images. Then, they carefully calibrate the training process for the model to respect both the image and our desired changes. It is finally trained by trial and error to replicate our paired image.

And voilà!

You get InstructPix2Pix, which allows you to edit your images with simple text instructions! The model is super powerful and generates images in seconds.

They also suggest the use of human-in-the-loop reinforcement learning, a very powerful technique that OpenAI recently used for ChatGPT, and you know where this lead.

Of course, this was just an overview of the approach, and I definitely invite you to try it yourself with their demo and learn more about their approach by reading the paper or with the code they made publicly available.

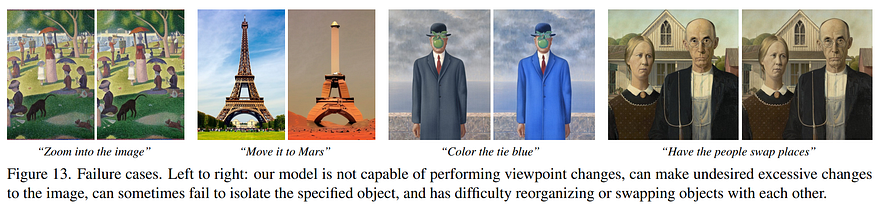

I’d love to know your thoughts on the results if you try it and where it seems to fail most often or succeed. Please, share the results with our community on Discord! All the links are in the description below.

I hope you’ve enjoyed this article!

Louis

References

►Brooks et al., 2022: InstructPix2Pix, https://arxiv.org/pdf/2211.09800.pdf

► Website: https://www.timothybrooks.com/instruct-pix2pix/

► Code: https://github.com/timothybrooks/instruct-pix2pix

► Demo to try it free: https://huggingface.co/spaces/timbrooks/instruct-pix2pix