The AI Monthly Top 3 - December 2021

December's most interesting AI breakthroughs, with video demos, short articles, code, and paper references.

Here are the 3 most interesting research papers of the month, in case you missed any of them. This is a curated list of the latest breakthroughs in AI and data science, ordered by release date, each with a clear video explanation, a link to a more in-depth article, and code (if applicable). Enjoy the read, and let me know in the comments, or by contacting me directly on LinkedIn, if I missed any important papers!

If you’d like to read more research papers, I recommend my article where I share my best tips for finding and reading them.

Follow me here or on Medium to see this AI top 3 every month!

Paper #1:

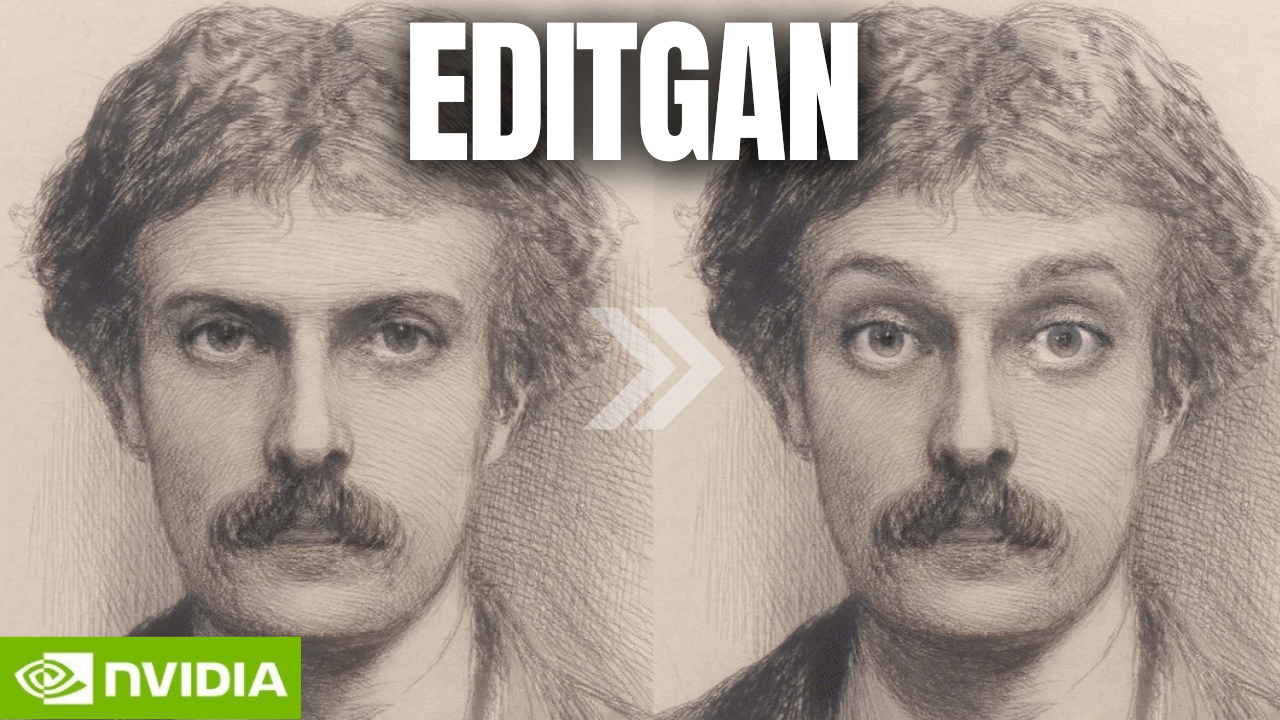

EditGAN: High-Precision Semantic Image Editing [1]

Control any feature from quick drafts, and the model will edit only what you want while keeping the rest of the image the same! This is a state-of-the-art, GAN-based model for editing images from sketches, by NVIDIA, MIT, and UofT.

Watch the video

A short read version

Paper #2:

CityNeRF: Building NeRF at City Scale [2]

The model is called CityNeRF and builds on NeRF, which I previously covered on my channel. NeRF is one of the first models to use radiance fields and machine learning to construct 3D models out of images. But NeRF is not that efficient and only works at a single scale. Here, CityNeRF is applied to satellite and ground-level images at the same time to produce 3D models at various scales for any viewpoint. In simple words, they bring NeRF to city scale. But how?
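As background on the radiance-field idea mentioned above, here is a minimal sketch of the volume-rendering step that NeRF-style models use to turn density and color samples along a camera ray into one pixel color. It is only an illustration and not CityNeRF's code. The function name `render_ray` and the toy sample values are assumptions made for this example. In a real model, the densities and colors would come from a trained neural network.

```python
import math


def render_ray(densities, colors, deltas):
    """Composite samples along one ray, front to back, into an RGB pixel.

    densities: volume density (sigma) at each sample along the ray
    colors:    RGB color at each sample, values in [0, 1]
    deltas:    distance between consecutive samples
    All inputs here are hypothetical toy values, not network outputs.
    """
    transmittance = 1.0  # fraction of light that has not been blocked so far
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        # Opacity contributed by this segment of the ray.
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        # Light remaining for the samples further along the ray.
        transmittance *= 1.0 - alpha
    return pixel


# Toy ray: empty space first, then a dense red surface.
pixel = render_ray(
    densities=[0.0, 50.0],
    colors=[[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]],
    deltas=[1.0, 1.0],
)
```

The empty first sample contributes nothing, so the pixel takes the color of the dense red sample behind it.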

Watch the video

A short read version

Paper #3:

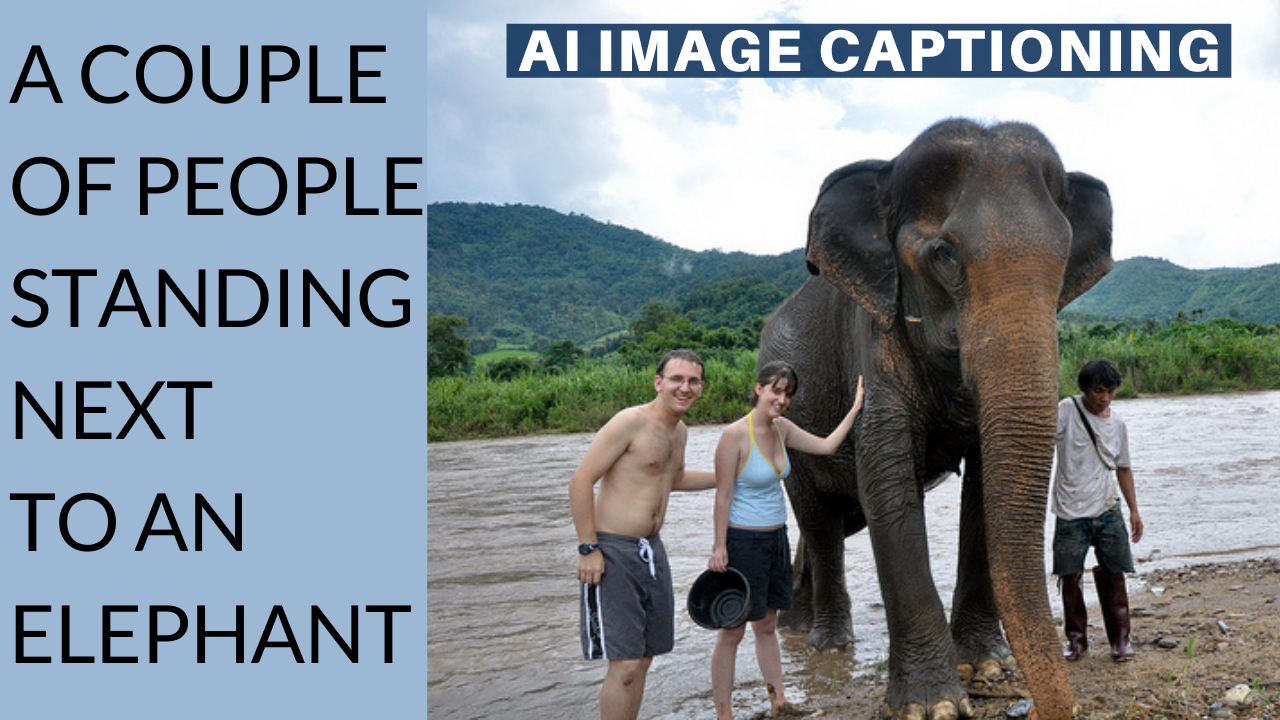

ClipCap: CLIP Prefix for Image Captioning [3]

We’ve seen AI generate images from other images using GANs. Then, there were models able to generate questionable images using text. In early 2021, DALL-E was published, beating all previous attempts to generate images from text input by using CLIP, a model that links images with text, as a guide. A very similar task called image captioning may sound really simple but is, in fact, just as complex. It is the ability of a machine to generate a natural description of an image.

It’s easy to simply tag the objects you see in the image, but it is quite another challenge to understand what’s happening in a single 2-dimensional picture, and this new model does it extremely well!
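To make "a model that links images with text" a bit more concrete, here is a minimal sketch of how a CLIP-style model can score how well a caption matches an image: both are mapped to vectors in a shared space and compared with cosine similarity. This is not ClipCap's captioning method, which generates new text, and it does not call the real CLIP model. The embeddings below are made-up toy vectors, and `cosine_similarity` and `rank_captions` are hypothetical helper names.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_captions(image_embedding, caption_embeddings):
    """Sort candidate captions from best to worst match for the image."""
    scores = {
        caption: cosine_similarity(image_embedding, embedding)
        for caption, embedding in caption_embeddings.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy 3-dimensional embeddings (assumed values; real ones have hundreds of dimensions).
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "a dog playing in a park": [0.8, 0.2, 0.1],
    "a plate of pasta": [0.1, 0.9, 0.3],
    "a city skyline at night": [0.2, 0.1, 0.9],
}

ranking = rank_captions(image_embedding, caption_embeddings)
best_caption = ranking[0][0]
```

With these toy vectors, the dog caption ranks first because its vector points in nearly the same direction as the image vector.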

Watch the video

A short read version

If you like my work and want to stay up-to-date with AI, you should definitely follow me on my other social media accounts (LinkedIn, Twitter) and subscribe to my weekly AI newsletter!

To support me:

- The best way to support me is by following me here on Medium or subscribing to my channel on YouTube if you like the video format.

- Support my work on Patreon.

- Join our Discord community, Learn AI Together, and share your projects, papers, and best courses, find Kaggle teammates, and much more!

- Here are the most useful tools I use daily as a research scientist for finding and reading AI research papers… Read more here.

References

[1] Ling, H., Kreis, K., Li, D., Kim, S.W., Torralba, A. and Fidler, S., 2021. EditGAN: High-Precision Semantic Image Editing. In Thirty-Fifth Conference on Neural Information Processing Systems.

[2] Xiangli, Y., Xu, L., Pan, X., Zhao, N., Rao, A., Theobalt, C., Dai, B. and Lin, D., 2021. CityNeRF: Building NeRF at City Scale.

[3] Mokady, R., Hertz, A. and Bermano, A.H., 2021. ClipCap: CLIP Prefix for Image Captioning. https://arxiv.org/abs/2111.09734